Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend the...

23 KB (2,842 words) - 23:31, 21 April 2025

that entropy should be a measure of how informative the average outcome of a variable is. For a continuous random variable, differential entropy is analogous...

72 KB (10,210 words) - 18:29, 22 April 2025

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$...

11 KB (2,069 words) - 09:58, 31 March 2025

in which case we say that the differential entropy is not defined. As in the discrete case the joint differential entropy of a set of random variables...

7 KB (998 words) - 07:21, 18 April 2025

the discrete entropy. It has been known since then that the differential entropy may differ from the infinitesimal limit of the discrete entropy by an infinite...

245 KB (40,560 words) - 10:00, 10 April 2025

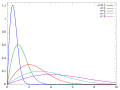

with probability density $p(x)$, then the differential entropy of $X$ is defined as $H(X) = -\int_{-\infty}^{\infty} p(x)$...

36 KB (4,479 words) - 17:16, 8 April 2025

Negentropy (redirect from Negative entropy)

...$-\,S(p_x)$, where $S(\varphi_x)$ is the differential entropy of the Gaussian density with the same mean and variance as $p_x$...

10 KB (1,107 words) - 01:03, 3 December 2024

d-dimensional entropy does not necessarily exist there. Finally, dimensional-rate bias generalizes Shannon's entropy and differential entropy, as one could...

16 KB (3,108 words) - 02:43, 2 June 2024

In information theory, the cross-entropy between two probability distributions $p$ and $q$, over the same underlying...

19 KB (3,264 words) - 23:00, 21 April 2025

properties; for example, differential entropy may be negative. The differential analogues of entropy, joint entropy, conditional entropy, and mutual information...

12 KB (2,183 words) - 15:35, 22 December 2024

with finite differential entropy, $R(D) \geq h(X) - h(D)$, where $h(D)$ is the differential entropy of a Gaussian...

15 KB (2,327 words) - 09:59, 31 March 2025

learning, and time delay estimation it is useful to estimate the differential entropy of a system or process, given some observations. The simplest and...

10 KB (1,415 words) - 07:41, 28 April 2025

Rayleigh distribution (section Differential entropy)

...$\operatorname{erf}(z)$ is the error function. The differential entropy is given by [citation needed] $H = 1 + \ln\left(\frac{\sigma}{\sqrt{2}}\right) + \frac{\gamma}{2}$...

16 KB (2,251 words) - 04:19, 13 February 2025

entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy,...

31 KB (4,196 words) - 01:16, 21 March 2025

vector, it is distributed as a generalized chi-squared variable. The differential entropy of the multivariate normal distribution is $h(f) = -\int_{-\infty}^{\infty} \int$...

65 KB (9,594 words) - 15:19, 3 May 2025

its time series X1, ..., Xn is i.i.d. with entropy H(X) in the discrete-valued case and differential entropy in the continuous-valued case. The Source...

12 KB (1,881 words) - 01:33, 23 January 2025

Shannon for differential entropy. It was formulated by Edwin Thompson Jaynes to address defects in the initial definition of differential entropy. Shannon...

6 KB (966 words) - 18:36, 24 February 2025

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

distributions, for which Shannon entropy ceases to be so useful (see differential entropy), but the relative entropy continues to be just as relevant...

77 KB (13,054 words) - 15:51, 28 April 2025

mathematical theory of probability, the entropy rate or source information rate is a function assigning an entropy to a stochastic process. For a strongly...

5 KB (784 words) - 18:08, 6 November 2024

V, Gilson MK (March 2010). "Thermodynamic and Differential Entropy under a Change of Variables". Entropy. 12 (3): 578–590. Bibcode:2010Entrp..12..578H...

3 KB (408 words) - 12:01, 19 July 2022

quantities include the Rényi entropy and the Tsallis entropy (generalizations of the concept of entropy), differential entropy (a generalization of quantities...

63 KB (7,878 words) - 23:56, 25 April 2025

Entropy (information theory), also called Shannon entropy, a measure of the unpredictability or information content of a message source Differential entropy...

5 KB (707 words) - 12:45, 16 February 2025

Mutual information (redirect from Mutual entropy)

variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies...

57 KB (8,730 words) - 09:58, 31 March 2025

the new distribution. The differential entropy of the half-normal distribution is exactly one bit less than the differential entropy of a zero-mean normal distribution...

9 KB (1,160 words) - 17:27, 17 March 2025

$I(X;Y)$, writing it in terms of the differential entropy: $I(X;Y) = h(Y) - h(Y \mid X) = h(Y) - h(X+Z \mid X)$...

15 KB (2,962 words) - 11:59, 26 October 2023

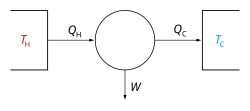

Boltzmann equation, which is a partial differential equation) is a probability equation relating the entropy $S$, also written as S...

13 KB (1,572 words) - 14:49, 26 March 2025

distribution with λ = 1/μ has the largest differential entropy. In other words, it is the maximum entropy probability distribution for a random variate...

43 KB (6,647 words) - 17:34, 15 April 2025

Clausius named the concept of "the differential of a quantity which depends on the configuration of the system", entropy (Entropie) after the Greek word...

109 KB (14,065 words) - 16:02, 30 April 2025

Entropy is one of the few quantities in the physical sciences that require a particular direction for time, sometimes called an arrow of time. As one...

34 KB (5,136 words) - 15:56, 28 February 2025

whereas for continuous random variables the related concept of differential entropy, written $h(X)$, is used (see Cover and Thomas...

12 KB (1,762 words) - 22:37, 8 November 2024