Seq2seq is a family of machine learning approaches used for natural language processing. Applications include language translation, image captioning,...

23 KB (2,946 words) - 06:00, 19 May 2025

language models. At the time, the focus of the research was on improving Seq2seq techniques for machine translation, but the authors go further in the paper...

15 KB (3,910 words) - 20:36, 1 May 2025

showed that GRUs are neither better nor worse than LSTMs for seq2seq. These early seq2seq models had no attention mechanism, and the state vector is accessible...

106 KB (13,108 words) - 21:15, 5 June 2025

mechanism is the "causally masked self-attention". Recurrent neural network seq2seq Transformer (deep learning architecture) Attention Dynamic neural network...

35 KB (3,427 words) - 11:03, 10 June 2025
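The "causally masked self-attention" mentioned in this result can be sketched in plain Python as follows. This is a minimal illustration with several simplifying assumptions. It uses a single head. Queries, keys and values are simply the input vectors, with no learned projections. The function name `causal_self_attention` is hypothetical. Each position may attend only to itself and earlier positions.

```python
import math

def causal_self_attention(xs):
    """Single-head scaled dot-product self-attention with a causal mask.

    Simplification (assumption): queries, keys and values are the input
    vectors themselves, with no learned projection matrices.
    Returns (outputs, weights); weights[i][j] is how strongly position i
    attends to position j, and is 0.0 whenever j > i.
    """
    n = len(xs)
    d = len(xs[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for i in range(n):
        # Scores are computed only for j <= i; future positions are masked out.
        scores = [sum(a * b for a, b in zip(xs[i], xs[j])) * scale
                  for j in range(i + 1)]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (n - i - 1)
        weights.append(row)
        # Output is the attention-weighted sum of the value vectors.
        outputs.append([sum(row[j] * xs[j][k] for j in range(n))
                        for k in range(d)])
    return outputs, weights

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = causal_self_attention(xs)
print(w[0])  # the first position can only attend to itself: [1.0, 0.0, 0.0]
```

Skipping the scores for positions after `i` gives the same result as setting those scores to negative infinity before the softmax. Both approaches yield zero weight on future tokens.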

established with colleagues from Google. He co-invented the doc2vec and seq2seq models in natural language processing. Le also initiated and led the AutoML...

10 KB (796 words) - 07:40, 10 June 2025

of Electrical Engineering and Computer Science. Vinyals co-invented the seq2seq model for machine translation along with Ilya Sutskever and Quoc Viet Le...

7 KB (516 words) - 23:20, 25 May 2025

2016. Because it preceded the existence of transformers, it was done by seq2seq deep LSTM networks. At the 2017 NeurIPS conference, Google researchers...

113 KB (11,789 words) - 13:20, 9 June 2025

Neural machine translation (section seq2seq)

Survey. Attention (machine learning) Transformer (machine learning model) Seq2seq Koehn, Philipp (2020). Neural Machine Translation. Cambridge University...

36 KB (3,901 words) - 13:08, 9 June 2025

ResNet behaves like an open-gated Highway Net. During the 2010s, the seq2seq model was developed, and attention mechanisms were added. It led to the...

169 KB (17,641 words) - 00:21, 11 June 2025

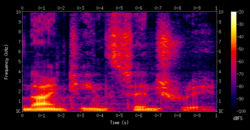

synthesis, spectrogram (or spectrogram in mel scale) is first predicted by a seq2seq model, then the spectrogram is fed to a neural vocoder to derive the synthesized...

20 KB (2,187 words) - 01:54, 24 May 2025

most commonly cited as the originators of seq2seq are two papers from 2014. A seq2seq architecture employs two RNNs, typically LSTMs, an "encoder"...

90 KB (10,419 words) - 09:51, 27 May 2025
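The encoder-decoder arrangement described in this result can be sketched in plain Python. This is a minimal, untrained illustration with the following assumptions:

- A vanilla tanh RNN cell stands in for the LSTM, for brevity.
- Tokens are fed in as one-hot vectors.
- Weights are random.
- Decoding is greedy.
- `ToySeq2Seq`, `rnn_step`, `bos` and `eos` are hypothetical names.

As in the early seq2seq models, there is no attention. The decoder sees the input only through the encoder's final state vector.

```python
import math
import random

def rnn_step(W_x, W_h, x, h):
    """Vanilla RNN cell (stand-in for an LSTM): h_new = tanh(W_x x + W_h h)."""
    return [math.tanh(sum(a * b for a, b in zip(rx, x)) +
                      sum(a * b for a, b in zip(rh, h)))
            for rx, rh in zip(W_x, W_h)]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def one_hot(i, n):
    v = [0.0] * n
    v[i] = 1.0
    return v

class ToySeq2Seq:
    """Hypothetical untrained encoder-decoder with random weights."""

    def __init__(self, vocab_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.V, self.H = vocab_size, hidden_size
        self.enc_Wx = rand_matrix(hidden_size, vocab_size, rng)
        self.enc_Wh = rand_matrix(hidden_size, hidden_size, rng)
        self.dec_Wx = rand_matrix(hidden_size, vocab_size, rng)
        self.dec_Wh = rand_matrix(hidden_size, hidden_size, rng)
        self.W_out = rand_matrix(vocab_size, hidden_size, rng)

    def encode(self, tokens):
        # Read the whole input; the final hidden state is the fixed-size
        # "state vector" handed to the decoder.
        h = [0.0] * self.H
        for t in tokens:
            h = rnn_step(self.enc_Wx, self.enc_Wh, one_hot(t, self.V), h)
        return h

    def decode(self, state, bos, eos, max_len=10):
        # Greedy decoding: start from the encoder state and feed back the
        # previously emitted token at each step until eos or max_len.
        h, prev, out = state, bos, []
        for _ in range(max_len):
            h = rnn_step(self.dec_Wx, self.dec_Wh, one_hot(prev, self.V), h)
            logits = [sum(w * hi for w, hi in zip(row, h)) for row in self.W_out]
            prev = max(range(self.V), key=logits.__getitem__)
            if prev == eos:
                break
            out.append(prev)
        return out

model = ToySeq2Seq(vocab_size=6, hidden_size=4)
state = model.encode([2, 3, 4])
print(len(state), model.decode(state, bos=0, eos=1, max_len=5))
```

Because the weights are random, the emitted token ids carry no meaning. The sketch shows only the data flow: input sequence, then a single state vector, then an output sequence.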

most commonly cited as the originators of seq2seq are two papers from 2014. A seq2seq architecture employs two RNNs, typically LSTMs, an "encoder"...

85 KB (8,625 words) - 20:54, 10 June 2025

inability to capture sequential data, which later led to the development of Seq2seq approaches, including recurrent neural networks that made use of long...

15 KB (1,613 words) - 00:22, 7 April 2025

2024-10-16. import torch from transformers import AutoConfig, AutoModelForSeq2SeqLM def count_parameters(model): enc = sum(p.numel() for p in model.encoder...

20 KB (1,932 words) - 03:55, 7 May 2025

Prefrontal cortex basal ganglia working memory Recurrent neural network Seq2seq Transformer (machine learning model) Time series Sepp Hochreiter; Jürgen...

52 KB (5,814 words) - 20:59, 10 June 2025

(or 7e21 FLOPs) of compute, which was 1.5 orders of magnitude larger than the Seq2seq model of 2014 (but about 2x smaller than GPT-J-6B in 2021). Google Translate's...

20 KB (1,733 words) - 07:15, 26 April 2025
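The ratios in this snippet can be turned into rough compute figures, taking them at face value. This is a back-of-the-envelope reading derived from the snippet's own numbers, not values stated in the source:

```latex
10^{1.5} \approx 31.6,
\qquad
\frac{7 \times 10^{21}\ \text{FLOPs}}{31.6} \approx 2.2 \times 10^{20}\ \text{FLOPs}\ \text{(implied 2014 Seq2seq)}

2 \times \left(7 \times 10^{21}\ \text{FLOPs}\right) = 1.4 \times 10^{22}\ \text{FLOPs}\ \text{(implied GPT-J-6B)}
```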

retrieve the best candidates from the knowledge base, then uses the ML-driven seq2seq model to re-rank the candidate responses and generate the answer. Creative...

28 KB (3,447 words) - 12:55, 26 May 2025

2022). "AlexaTM 20B: Few-Shot Learning Using a Large-Scale Multilingual Seq2Seq Model". arXiv:2208.01448 [cs.CL]. "AlexaTM 20B is now available in Amazon...

64 KB (3,361 words) - 16:05, 24 May 2025

acronym stands for "Language Model for Dialogue Applications". Built on the seq2seq architecture, transformer-based neural networks developed by Google Research...

39 KB (2,966 words) - 21:40, 29 May 2025

the development of advanced neural network techniques, especially the Seq2Seq model, and the availability of powerful computational resources, neural...

24 KB (2,859 words) - 21:27, 24 April 2024

end-to-end neural network architecture known as sequence-to-sequence (seq2seq), which uses two recurrent neural networks (RNNs): an encoder RNN and a decoder...

25 KB (3,068 words) - 15:39, 30 May 2025

learning List of datasets for machine learning research Predictive analytics Seq2seq Fairness (machine learning) Embedding, for other kinds of embeddings Book...

19 KB (2,404 words) - 18:07, 10 February 2025

"Next Location Prediction with a Graph Convolutional Network Based on a Seq2seq Framework". KSII Transactions on Internet and Information Systems. 14 (5)...

19 KB (1,961 words) - 08:21, 7 December 2023

with a one-hot distribution over the vocabulary, while autoregressive and seq2seq models generate new text based on the source predicting one word at a time...

24 KB (2,939 words) - 16:55, 9 June 2025