optimization, the revised simplex method is a variant of George Dantzig's simplex method for linear programming. The revised simplex method is mathematically...

11 KB (1,447 words) - 08:04, 11 February 2025

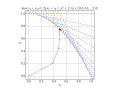

In mathematical optimization, Dantzig's simplex algorithm (or simplex method) is a popular algorithm for linear programming. The name...

42 KB (6,261 words) - 14:30, 16 June 2025

HiGHS optimization solver (category Optimization algorithms and methods)

in July 2022. HiGHS has implementations of the primal and dual revised simplex method for solving LP problems, based on techniques described by Hall and...

16 KB (1,190 words) - 05:02, 29 June 2025

The Nelder–Mead method (also downhill simplex method, amoeba method, or polytope method) is a numerical method used to find the minimum or maximum of an...

17 KB (2,379 words) - 16:52, 25 April 2025
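
The snippet only names the Nelder–Mead method, so the following is a minimal sketch and not code from the article. It keeps a simplex of n + 1 points and repeatedly replaces the worst vertex by reflection, expansion, or contraction. If none of those moves helps, it shrinks the simplex toward the best vertex. The coefficients `alpha`, `gamma`, `rho` and `sigma` use common textbook defaults (1, 2, 0.5, 0.5). The function name `nelder_mead`, the initial `step` and the stopping rule are hypothetical choices made for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Point = List[float]


def nelder_mead(
    f: Callable[[Sequence[float]], float],
    x0: Sequence[float],
    step: float = 0.5,      # hypothetical initial edge length of the simplex
    tol: float = 1e-10,     # stop when best and worst values are this close
    max_iter: int = 2000,
    alpha: float = 1.0,     # reflection coefficient
    gamma: float = 2.0,     # expansion coefficient
    rho: float = 0.5,       # contraction coefficient
    sigma: float = 0.5,     # shrink coefficient
) -> Tuple[Point, float]:
    n = len(x0)
    # Initial simplex: x0 plus one point offset along each coordinate axis.
    simplex: List[Point] = [list(x0)]
    for i in range(n):
        p = list(x0)
        p[i] += step
        simplex.append(p)
    values = [f(p) for p in simplex]

    for _ in range(max_iter):
        # Sort vertices from best (lowest f) to worst.
        order = sorted(range(n + 1), key=lambda k: values[k])
        simplex = [simplex[k] for k in order]
        values = [values[k] for k in order]
        if values[-1] - values[0] < tol:
            break

        # Centroid of every vertex except the worst one.
        centroid = [sum(p[j] for p in simplex[:-1]) / n for j in range(n)]
        worst = simplex[-1]

        # Reflection: mirror the worst vertex through the centroid.
        xr = [c + alpha * (c - w) for c, w in zip(centroid, worst)]
        fr = f(xr)
        if values[0] <= fr < values[-2]:
            simplex[-1], values[-1] = xr, fr
            continue

        # Expansion: the reflected point is the new best, so try going further.
        if fr < values[0]:
            xe = [c + gamma * (r - c) for c, r in zip(centroid, xr)]
            fe = f(xe)
            simplex[-1], values[-1] = (xe, fe) if fe < fr else (xr, fr)
            continue

        # Contraction: pull the worst vertex toward the centroid.
        xc = [c + rho * (w - c) for c, w in zip(centroid, worst)]
        fc = f(xc)
        if fc < values[-1]:
            simplex[-1], values[-1] = xc, fc
            continue

        # Shrink: move every other vertex toward the best one by factor sigma.
        best = simplex[0]
        simplex = [best] + [
            [b + sigma * (x - b) for b, x in zip(best, p)] for p in simplex[1:]
        ]
        values = [values[0]] + [f(p) for p in simplex[1:]]

    k = min(range(n + 1), key=lambda i: values[i])
    return simplex[k], values[k]
```

On a smooth bowl such as `lambda p: (p[0] - 1) ** 2 + (p[1] + 2) ** 2` started from `[0.0, 0.0]`, the sketch should settle near `(1, -2)` without using any derivatives.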

contrast to the simplex method, which has exponential run-time in the worst case. Practically, they run as fast as the simplex method—in contrast to the...

30 KB (4,691 words) - 00:20, 20 June 2025

GNU General Public License. GLPK uses the revised simplex method and the primal-dual interior point method for non-integer problems and the branch-and-bound...

4 KB (336 words) - 09:34, 6 April 2025

research, the Big M method is a method of solving linear programming problems using the simplex algorithm. The Big M method extends the simplex algorithm to...

6 KB (871 words) - 10:03, 13 May 2025

ISBN 978-3-642-04044-3. Evans, J.; Steuer, R. (1973). "A Revised Simplex Method for Linear Multiple Objective Programs". Mathematical Programming...

49 KB (5,970 words) - 21:20, 8 June 2025

Criss-cross algorithm (redirect from Criss-cross method)

on-the-fly calculated parts of a tableau, if implemented like the revised simplex method). In a general step, if the tableau is primal or dual infeasible...

24 KB (2,432 words) - 17:42, 23 June 2025

the process is repeated until an integer solution is found. Using the simplex method to solve a linear program produces a set of equations of the form x...

10 KB (1,546 words) - 09:57, 10 December 2023

problems as linear programs and gave a solution very similar to the later simplex method. Hitchcock had died in 1957, and the Nobel Memorial Prize is not awarded...

61 KB (6,690 words) - 17:57, 6 May 2025

In numerical analysis, the Newton–Raphson method, also known simply as Newton's method, named after Isaac Newton and Joseph Raphson, is a root-finding...

70 KB (8,960 words) - 23:11, 23 June 2025
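
The snippet describes Newton–Raphson as a root-finding method. The sketch below applies the standard update x_{n+1} = x_n - f(x_n) / f'(x_n). The function name, the tolerance and the iteration cap are illustrative assumptions and do not come from the article.

```python
from typing import Callable


def newton_raphson(
    f: Callable[[float], float],
    df: Callable[[float], float],
    x0: float,
    tol: float = 1e-12,   # stop when the Newton step is smaller than this
    max_iter: int = 50,
) -> float:
    """Find a root of f by repeatedly following the tangent line at x."""
    x = x0
    for _ in range(max_iter):
        dfx = df(x)
        if dfx == 0.0:
            raise ZeroDivisionError("f'(x) is zero; the tangent never crosses the axis")
        step = f(x) / dfx  # Newton update: x_{n+1} = x_n - f(x_n) / f'(x_n)
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton-Raphson did not converge within max_iter steps")
```

For example, `newton_raphson(lambda x: x * x - 2, lambda x: 2 * x, 1.0)` approximates the square root of 2.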

method like gradient descent, hill climbing, Newton's method, or quasi-Newton methods like BFGS, is an algorithm of an iterative method or a method of...

11 KB (1,556 words) - 01:03, 20 June 2025

Lagrangian methods are a certain class of algorithms for solving constrained optimization problems. They have similarities to penalty methods in that they...

15 KB (1,940 words) - 06:08, 22 April 2025

Line search (redirect from Line search method)

The descent direction can be computed by various methods, such as gradient descent or a quasi-Newton method. The step size can be determined either exactly...

9 KB (1,339 words) - 01:59, 11 August 2024
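
The snippet is cut off before it says how the step size is chosen, so this sketch shows one common inexact option rather than the article's own text: backtracking under the Armijo sufficient-decrease condition. The function name and the constants `c` and `tau` are assumptions chosen for illustration.

```python
from typing import Callable, Sequence


def backtracking_line_search(
    f: Callable[[Sequence[float]], float],
    grad: Callable[[Sequence[float]], Sequence[float]],
    x: Sequence[float],
    direction: Sequence[float],
    alpha0: float = 1.0,   # initial trial step size
    c: float = 1e-4,       # Armijo sufficient-decrease constant, 0 < c < 1
    tau: float = 0.5,      # factor by which the step shrinks after each failed trial
    max_trials: int = 60,
) -> float:
    """Return a step size alpha satisfying the Armijo condition
    f(x + alpha*d) <= f(x) + c * alpha * grad(x)^T d."""
    fx = f(x)
    slope = sum(g * d for g, d in zip(grad(x), direction))
    if slope >= 0:
        raise ValueError("direction is not a descent direction at x")
    alpha = alpha0
    for _ in range(max_trials):
        trial = [xi + alpha * di for xi, di in zip(x, direction)]
        if f(trial) <= fx + c * alpha * slope:
            return alpha
        alpha *= tau
    raise RuntimeError("no step satisfying the Armijo condition was found")
```

With f(x) = x0² + x1² at x = (1, 1) and the steepest-descent direction (-2, -2), the full step overshoots to (-1, -1) without decreasing f. The halved step 0.5 lands exactly on the minimum and is accepted.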

Mathematical optimization (category Mathematical and quantitative methods (economics))

simplex algorithm that are especially suited for network optimization Combinatorial algorithms Quantum optimization algorithms The iterative methods used...

53 KB (6,155 words) - 14:53, 3 July 2025

The standard algorithm for solving linear problems at the time was the simplex algorithm, which has a run time that typically is linear in the size of...

23 KB (3,704 words) - 01:44, 24 June 2025

solved by the simplex method, which usually works in polynomial time in the problem size but is not guaranteed to, or by interior point methods which are...

13 KB (1,844 words) - 01:05, 24 May 2025

Powell's dog leg method, also called Powell's hybrid method, is an iterative optimisation algorithm for the solution of non-linear least squares problems...

6 KB (879 words) - 07:48, 13 December 2024

Rosenbrock methods refers to either of two distinct ideas in numerical computation, both named for Howard H. Rosenbrock. Rosenbrock methods for stiff differential...

3 KB (295 words) - 13:12, 24 July 2024

Integer programming (section Heuristic methods)

an ILP is totally unimodular, rather than use an ILP algorithm, the simplex method can be used to solve the LP relaxation and the solution will be integer...

30 KB (4,226 words) - 01:54, 24 June 2025

Levenberg–Marquardt algorithm (redirect from Levenberg-Marquardt Method)

algorithm (LMA or just LM), also known as the damped least-squares (DLS) method, is used to solve non-linear least squares problems. These minimization...

22 KB (3,211 words) - 07:50, 26 April 2024

In numerical analysis, a quasi-Newton method is an iterative numerical method used either to find zeroes or to find local maxima and minima of functions...

19 KB (2,264 words) - 13:41, 30 June 2025
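
In one dimension, the secant method is commonly presented as the simplest quasi-Newton method for finding zeroes. It uses Newton's update but replaces the exact derivative with a slope estimated from the two most recent iterates. The sketch below is illustrative; its name and parameters are assumptions and do not come from the article.

```python
from typing import Callable


def secant(
    f: Callable[[float], float],
    x0: float,
    x1: float,
    tol: float = 1e-12,   # stop when successive iterates are this close
    max_iter: int = 100,
) -> float:
    """Root-finding with the secant method: Newton's update with f'(x) replaced
    by the slope through the two most recent iterates."""
    f0, f1 = f(x0), f(x1)
    for _ in range(max_iter):
        if f1 == f0:
            raise ZeroDivisionError("secant slope is zero")
        slope = (f1 - f0) / (x1 - x0)  # finite-difference stand-in for f'(x1)
        x2 = x1 - f1 / slope
        if abs(x2 - x1) < tol:
            return x2
        x0, f0 = x1, f1
        x1, f1 = x2, f(x2)
    raise RuntimeError("secant method did not converge within max_iter steps")
```

Unlike Newton's method, this version needs no derivative function. The cost is slightly slower (superlinear rather than quadratic) convergence near a simple root.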

Bayesian optimization (category Sequential methods)

he first proposed a new method of locating the maximum point of an arbitrary multipeak curve in a noisy environment. This method provided an important theoretical...

21 KB (2,323 words) - 14:01, 8 June 2025

Greedy algorithm (redirect from Greedy method)

problem class, it typically becomes the method of choice because it is faster than other optimization methods like dynamic programming. Examples of such...

17 KB (1,918 words) - 19:59, 19 June 2025

Dynamic programming (category Optimization algorithms and methods)

Dynamic programming is both a mathematical optimization method and an algorithmic paradigm. The method was developed by Richard Bellman in the 1950s and has...

59 KB (9,166 words) - 15:39, 12 June 2025

Gradient descent (redirect from Gradient descent method)

Gradient descent is a method for unconstrained mathematical optimization. It is a first-order iterative algorithm for minimizing a differentiable multivariate...

39 KB (5,600 words) - 14:21, 20 June 2025
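
The snippet calls gradient descent a first-order iterative method for minimizing a differentiable function. A minimal sketch with a fixed step size is shown below. The constant `learning_rate`, the gradient-norm stopping rule and the function name are assumptions; practical implementations often choose the step by a line search instead.

```python
import math
from typing import Callable, List, Sequence


def gradient_descent(
    grad: Callable[[Sequence[float]], Sequence[float]],
    x0: Sequence[float],
    learning_rate: float = 0.1,  # fixed step size (an assumption)
    tol: float = 1e-10,          # stop once the gradient norm falls below this
    max_iter: int = 10_000,
) -> List[float]:
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        if math.sqrt(sum(gi * gi for gi in g)) < tol:
            break
        # Step against the gradient: x <- x - eta * grad f(x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x
```

For f(x, y) = (x - 3)² + 2(y + 1)², whose gradient is (2(x - 3), 4(y + 1)), each step with rate 0.1 multiplies the error by 0.8 in x and 0.6 in y. The iterates therefore converge to (3, -1).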

Dantzig–Wolfe decomposition (category Decomposition methods)

large-scale linear programs. For most linear programs solved via the revised simplex algorithm, at each step, most columns (variables) are not in the basis...

7 KB (891 words) - 22:53, 16 March 2024

Frank–Wolfe algorithm (redirect from Conditional gradient method)

known as the conditional gradient method, reduced gradient algorithm and the convex combination algorithm, the method was originally proposed by Marguerite...

8 KB (1,200 words) - 19:37, 11 July 2024

Metaheuristic (redirect from Meta-Heuristic Methods)

Remote Control. 26 (2): 246–253. Nelder, J.A.; Mead, R. (1965). "A simplex method for function minimization". Computer Journal. 7 (4): 308–313. doi:10...

48 KB (4,646 words) - 00:34, 24 June 2025