variables). Sparsity regularization methods focus on selecting the input variables that best describe the output. Structured sparsity regularization methods...

24 KB (3,812 words) - 20:48, 26 October 2023
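Structured sparsity penalties act on groups of variables rather than on individual coefficients. The sketch below assumes the group-lasso penalty, which is one common structured-sparsity choice and not necessarily the one the article emphasizes. Its proximal operator (block soft thresholding) either shrinks an entire group or zeroes it out. The function name and the disjoint-groups assumption are illustrative.

```python
import math

def group_soft_threshold(w, groups, t):
    """Proximal operator of t * sum_g ||w_g||_2 (block soft thresholding).

    w: list of floats; groups: list of disjoint index lists (assumed);
    t: threshold, i.e. step size times regularization weight.
    """
    out = list(w)
    for g in groups:
        norm = math.sqrt(sum(w[i] ** 2 for i in g))
        # Shrink the whole group toward zero; it becomes exactly zero if norm <= t.
        scale = max(0.0, 1.0 - t / norm) if norm > 0 else 0.0
        for i in g:
            out[i] = scale * w[i]
    return out

print(group_soft_threshold([3.0, 4.0, 0.1, 0.1], [[0, 1], [2, 3]], t=1.0))
```

Here the first group has norm 5 and is scaled by 0.8. The second group has norm below the threshold, so it is removed as a unit.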

regularization procedures can be divided in many ways, the following delineation is particularly helpful: Explicit regularization is regularization whenever...

30 KB (4,628 words) - 00:24, 11 July 2025

regularization problems where the regularization penalty may not be differentiable. One such example is $\ell_1$ regularization (also...

20 KB (3,193 words) - 21:53, 22 May 2025
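As a minimal sketch of the proximal gradient idea for a non-differentiable $\ell_1$ penalty, ISTA alternates two steps: a gradient step on the smooth least-squares term, then soft thresholding (the proximal operator of the $\ell_1$ norm). The step size $1/\lVert A\rVert_F^2$ is an assumed, conservative choice. Dense lists stand in for a real linear-algebra library.

```python
def soft_threshold(v, t):
    """Proximal operator of t*|.|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def ista(A, b, lam, iters=500):
    """Minimize 0.5*||A x - b||^2 + lam*||x||_1 by proximal gradient (ISTA)."""
    m, n = len(A), len(A[0])
    # ||A||_F^2 upper-bounds the Lipschitz constant of the smooth gradient
    # (the largest eigenvalue of A^T A), so 1/L is a safe step size.
    L = sum(a * a for row in A for a in row)
    step = 1.0 / L
    x = [0.0] * n
    for _ in range(iters):
        r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
        grad = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
        # Gradient step on the smooth part, then the l1 proximal step.
        x = [soft_threshold(x[j] - step * grad[j], step * lam) for j in range(n)]
    return x
```

With `A` equal to the identity, `b = [3.0, 0.5]` and `lam = 1.0`, the iterates converge to `[2.0, 0.0]`. The second coefficient is set exactly to zero rather than merely made small.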

matrix regularization generalizes notions of vector regularization to cases where the object to be learned is a matrix. The purpose of regularization is to...

15 KB (2,510 words) - 21:06, 14 April 2025

squares regression on the system (4) with sparsity-promoting ($L_1$) regularization $\xi_k = \arg\min_{\xi_k'} \lVert \dot{X}_k - \Theta(X)\,\xi$...

6 KB (895 words) - 08:07, 19 February 2025

similar to dropout as it introduces dynamic sparsity within the model, but differs in that the sparsity is on the weights, rather than the output vectors...

138 KB (15,569 words) - 19:41, 17 July 2025
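The snippet contrasts dropout, which masks outputs, with masking the weights themselves. The sketch below covers only the training-time forward pass of a single linear layer. Each weight is kept independently with probability `keep_prob`. The function name and the absence of any inference-time rescaling are assumptions of this illustration.

```python
import random

def dropconnect_forward(W, x, keep_prob, rng):
    """Training-time forward pass y = (M * W) x with a fresh Bernoulli mask M.

    Unlike dropout, which zeroes whole output activations, each individual
    weight W[i][j] is kept with probability keep_prob and zeroed otherwise.
    """
    y = []
    for row in W:
        total = 0.0
        for w_ij, x_j in zip(row, x):
            if rng.random() < keep_prob:  # mask entry M[i][j] = 1
                total += w_ij * x_j
        y.append(total)
    return y

rng = random.Random(0)
W = [[0.5, -1.0], [2.0, 0.25]]
print(dropconnect_forward(W, [1.0, 2.0], keep_prob=0.5, rng=rng))
```

A new mask is drawn on every call, so the same input can produce different outputs across training steps. That randomness is the source of the regularizing effect.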

Autoencoder (redirect from Sparse autoencoder)

the k-sparse autoencoder. Instead of forcing sparsity, we add a sparsity regularization loss, then optimize for $\min_{\theta,\phi} L(\theta,\phi) + \lambda L_{\text{sparse}}(\theta$...

51 KB (6,540 words) - 07:38, 7 July 2025
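To make the objective $L + \lambda L_{\text{sparse}}$ concrete, the sketch below evaluates it for one input vector. It assumes a bias-free linear encoder with ReLU, a linear decoder, mean squared reconstruction error, and an $L_1$ penalty on the hidden code as $L_{\text{sparse}}$. All of these are illustrative choices. The parameter names `enc_W` and `dec_W` are hypothetical and are not mapped to the article's $\theta$ and $\phi$.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def matvec(M, v):
    return [sum(m * a for m, a in zip(row, v)) for row in M]

def sparse_ae_loss(x, enc_W, dec_W, lam):
    """Return (reconstruction, sparsity, total) for one input vector x.

    enc_W: encoder weights, dec_W: decoder weights (hypothetical, no biases).
    The sparsity term is an L1 penalty on the hidden code.
    """
    h = relu(matvec(enc_W, x))        # hidden code
    x_hat = matvec(dec_W, h)          # reconstruction
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    sparsity = sum(abs(a) for a in h)
    return recon, sparsity, recon + lam * sparsity
```

In training, the gradient of `total` would be minimized over both weight matrices. A larger `lam` trades reconstruction accuracy for codes with fewer active units.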

Structural equation modeling, Structural risk minimization, Structured sparsity regularization, Structured support vector machine, Subclass reachability, Sufficient...

39 KB (3,385 words) - 07:36, 7 July 2025

also Lasso, LASSO or L1 regularization) is a regression analysis method that performs both variable selection and regularization in order to enhance the...

52 KB (8,057 words) - 00:46, 6 July 2025
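One standard way to fit the lasso is cyclic coordinate descent. This is one of several solvers; the snippet does not prescribe any. The sketch below assumes the objective $\frac{1}{2n}\lVert y - X\beta\rVert^2 + \lambda\lVert\beta\rVert_1$ with no intercept. Each coordinate update is a soft-thresholded univariate least-squares fit to the partial residual, which is how variable selection (coefficients set exactly to zero) and shrinkage happen together.

```python
def soft_threshold(v, t):
    """Shrink v toward zero by t, returning exactly 0.0 inside [-t, t]."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def lasso_cd(X, y, lam, sweeps=100):
    """Minimize (1/(2n))*||y - X b||^2 + lam*||b||_1 by cyclic coordinate descent.

    X: list of n rows with p features each. No intercept is fitted.
    """
    n, p = len(X), len(X[0])
    b = [0.0] * p
    for _ in range(sweeps):
        for j in range(p):
            # Correlation of feature j with the residual that excludes feature j.
            rho = sum(X[i][j] * (y[i] - sum(X[i][k] * b[k] for k in range(p) if k != j))
                      for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            b[j] = soft_threshold(rho, lam) / z
    return b
```

With orthogonal columns and `y = 3*x1 + 0.2*x2`, setting `lam = 0.5` gives `b = [2.5, 0.0]`. The large effect is shrunk and the small one is removed.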

shrinkage. There are several variations to the basic sparse approximation problem. Structured sparsity: In the original version of the problem, any of the...

15 KB (2,212 words) - 02:22, 11 July 2025

Compressed sensing (redirect from Sparse recovery)

under which recovery is possible. The first one is sparsity, which requires the signal to be sparse in some domain. The second one is incoherence, which...

46 KB (5,874 words) - 16:00, 4 May 2025

kernel; Predictive analytics; Regularization perspectives on support vector machines; Relevance vector machine, a probabilistic sparse-kernel model identical...

65 KB (9,071 words) - 09:49, 24 June 2025

Multi-task learning (section Known task structure)

learning works because regularization induced by requiring an algorithm to perform well on a related task can be superior to regularization that prevents overfitting...

43 KB (6,154 words) - 20:44, 10 July 2025

Manifold regularization adds a second regularization term, the intrinsic regularizer, to the ambient regularizer used in standard Tikhonov regularization. Under...

28 KB (3,875 words) - 18:54, 10 July 2025
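A small transductive sketch shows how the intrinsic regularizer works on a graph. Function values live on the nodes, and the intrinsic term $f^\top L f$ (with $L = D - W$) penalizes differences between connected nodes. As a simplifying assumption, the ambient term is a plain squared norm of the node values, standing in for the RKHS norm of the full formulation. Setting the gradient with respect to each $f_i$ to zero gives the Gauss-Seidel update used below. The function name is hypothetical.

```python
def laplacian_regularized_fit(W, labels, gamma_a, gamma_i, iters=2000):
    """Minimize  sum_{i labeled} (f_i - y_i)^2
               + gamma_a * sum_i f_i^2          (simplified ambient term)
               + gamma_i * f^T L f              (intrinsic term, L = D - W)

    W: symmetric adjacency matrix (list of lists); labels: dict node -> y.
    Assumes gamma_a > 0 or that every connected component has a labeled node.
    """
    n = len(W)
    f = [0.0] * n
    for _ in range(iters):
        for i in range(n):
            c = 1.0 if i in labels else 0.0
            y = labels.get(i, 0.0)
            degree = sum(W[i][j] for j in range(n) if j != i)
            neighbor_sum = sum(W[i][j] * f[j] for j in range(n) if j != i)
            # Zero of the gradient w.r.t. f_i, holding the other nodes fixed.
            f[i] = (c * y + gamma_i * neighbor_sum) / (c + gamma_a + gamma_i * degree)
    return f
```

On a five-node chain labeled 0 at one end and 1 at the other, the unlabeled nodes receive smoothly increasing values. The intrinsic penalty propagates the labels along the graph.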

successfully used RLHF for this goal have noted that the use of KL regularization in RLHF, which aims to prevent the learned policy from straying too...

62 KB (8,617 words) - 19:50, 11 May 2025
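The KL regularization mentioned in the snippet is often applied by subtracting a penalty from the reward model's score. The sketch below assumes the common form $r - \beta \sum_t \big(\log\pi(y_t) - \log\pi_{\text{ref}}(y_t)\big)$, where the summed log-ratio over the sampled response is a single-sample estimate of $\mathrm{KL}(\pi \,\Vert\, \pi_{\text{ref}})$. Exact formulations vary between implementations.

```python
def kl_regularized_reward(reward, policy_logprobs, ref_logprobs, beta):
    """Sequence-level reward with a KL penalty toward a reference policy.

    policy_logprobs / ref_logprobs: per-token log-probabilities of the sampled
    response under the learned policy and the frozen reference model.
    """
    log_ratio = sum(p - q for p, q in zip(policy_logprobs, ref_logprobs))
    return reward - beta * log_ratio
```

If the policy assigns the response the same probability as the reference model, the reward is unchanged. The more the policy's probabilities drift above the reference model's, the larger the deduction, which discourages straying from the reference.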

and other metrics. Regularization perspectives on support-vector machines interpret SVM as a special case of Tikhonov regularization, specifically Tikhonov...

10 KB (1,475 words) - 06:07, 17 April 2025
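In the Tikhonov view, a linear SVM minimizes an average hinge loss plus a squared-norm penalty. The sketch below runs plain subgradient descent on $\frac{1}{n}\sum_i \max(0, 1 - y_i\langle w, x_i\rangle) + \lambda\lVert w\rVert^2$. It assumes a bias-free linear model and labels in $\{+1, -1\}$. The learning rate and epoch count are arbitrary illustrative values.

```python
def train_linear_svm(X, y, lam, lr=0.1, epochs=200):
    """Subgradient descent on (1/n) * sum_i max(0, 1 - y_i <w, x_i>) + lam * ||w||^2."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [2.0 * lam * wj for wj in w]  # gradient of the Tikhonov term
        for xi, yi in zip(X, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            if margin < 1.0:  # hinge is active: subgradient is -y_i x_i / n
                for j in range(d):
                    grad[j] -= yi * xi[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def predict(w, x):
    return 1 if sum(a * b for a, b in zip(w, x)) >= 0 else -1
```

Only points inside the margin contribute to the loss gradient, while the regularizer keeps pulling `w` toward zero. This split between data term and penalty is the one the Tikhonov interpretation makes explicit.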

for efficient computation; parallel tree structure boosting with sparsity; efficient cacheable block structure for decision tree training. XGBoost works...

14 KB (1,323 words) - 09:51, 14 July 2025

codes. The regularization and kernel theory literature for vector-valued functions followed in the 2000s. While the Bayesian and regularization perspectives...

26 KB (4,220 words) - 13:17, 1 May 2025

language model. The skip-gram language model is an attempt at overcoming the data sparsity problem that the preceding model (i.e., the word n-gram language model) faced...

17 KB (2,424 words) - 11:12, 19 July 2025
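The skip-gram idea relaxes the requirement that the words in an n-gram be consecutive. The sketch below assumes the usual k-skip-bigram definition: ordered pairs of tokens with at most `k` tokens skipped between them, where `k = 0` reduces to ordinary bigrams. Because each sentence yields more pairs, each pair tends to be observed more often than under strict contiguity. The function name is hypothetical.

```python
def k_skip_bigrams(tokens, k):
    """All pairs (tokens[i], tokens[j]) with i < j and at most k tokens skipped between them."""
    out = []
    for i in range(len(tokens)):
        for j in range(i + 1, min(len(tokens), i + k + 2)):
            out.append((tokens[i], tokens[j]))
    return out

print(k_skip_bigrams(["the", "rain", "in", "Spain"], k=1))
# [('the', 'rain'), ('the', 'in'), ('rain', 'in'), ('rain', 'Spain'), ('in', 'Spain')]
```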

Bayesian statistics of graphical models, false discovery rates, and regularization. She is the Louis Block Professor of statistics at the University of...

7 KB (471 words) - 22:06, 1 May 2025

through future improvements like better culling approaches, antialiasing, regularization, and compression techniques. Extending 3D Gaussian splatting to dynamic...

15 KB (1,606 words) - 21:08, 19 July 2025

$\mathbf{\Gamma}$. The local sparsity constraint allows stronger uniqueness and stability conditions than the global sparsity prior, and has been shown to be...

38 KB (6,082 words) - 09:32, 29 May 2024

low-dimensional structure is needed for successful covariance matrix estimation in high dimensions. Examples of such structures include sparsity, low rankness...

20 KB (2,559 words) - 15:42, 4 October 2024
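The snippet lists sparsity as one kind of low-dimensional structure. One simple estimator that exploits it is entrywise hard thresholding of the sample covariance. This estimator is chosen here for illustration, since the snippet does not name one. The threshold `t` is a tuning parameter assumed to be given.

```python
def sample_cov(data):
    """Unbiased sample covariance; rows are observations, columns are variables."""
    n, p = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(p)]
    return [[sum((row[a] - means[a]) * (row[b] - means[b]) for row in data) / (n - 1)
             for b in range(p)] for a in range(p)]

def hard_threshold_cov(S, t):
    """Zero off-diagonal entries with |S[a][b]| < t; always keep the diagonal."""
    return [[S[a][b] if a == b or abs(S[a][b]) >= t else 0.0
             for b in range(len(S))] for a in range(len(S))]
```

Small off-diagonal entries, which in high dimensions are often dominated by sampling noise, are set exactly to zero. The resulting estimate is sparse and remains symmetric.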

the training corpus. During training, regularization loss is also used to stabilize training. However, regularization loss is usually not used during testing...

133 KB (14,140 words) - 09:55, 21 July 2025

representation error (over the input data), together with L1 regularization on the weights to enable sparsity (i.e., the representation of each data point has only...

45 KB (5,114 words) - 09:22, 4 July 2025

different formulation for numerical computation in order to take advantage of sparse matrix methods (e.g. lme4 and MixedModels.jl). In the context of Bayesian...

23 KB (2,888 words) - 16:45, 25 June 2025

L1 regularization (akin to Lasso) is added to NMF with the mean squared error cost function, the resulting problem may be called non-negative sparse coding...

68 KB (7,783 words) - 02:31, 2 June 2025
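Adding an $L_1$ term on $H$ to the squared-error NMF cost changes the multiplicative update for $H$: the penalty weight $\lambda$ is added to its denominator, pushing small entries toward zero. The sketch below approximately minimizes $\tfrac{1}{2}\lVert V - WH\rVert^2 + \lambda\sum H$. For $W$ it uses a simplified scheme (assumed here): the plain multiplicative update followed by unit-norm columns, with the rows of $H$ rescaled so that $WH$ is unchanged. Without this normalization, $W$ could grow while $H$ shrinks, evading the penalty.

```python
import random

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def sq_error(V, W, H):
    WH = matmul(W, H)
    return sum((v - x) ** 2 for rv, rx in zip(V, WH) for v, x in zip(rv, rx))

def nn_sparse_coding(V, rank, lam, iters=200, seed=0, eps=1e-12):
    """Approximately minimize 0.5*||V - W H||^2 + lam * sum(H) with W, H >= 0."""
    rng = random.Random(seed)
    m, n = len(V), len(V[0])
    W = [[rng.random() for _ in range(rank)] for _ in range(m)]
    H = [[rng.random() for _ in range(n)] for _ in range(rank)]
    for _ in range(iters):
        # H update: the L1 weight lam enters the denominator.
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * nu / (de + lam + eps) for h, nu, de in zip(rh, rn, rd)]
             for rh, rn, rd in zip(H, num, den)]
        # W update: standard multiplicative rule for the squared error.
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * nu / (de + eps) for w, nu, de in zip(rw, rn, rd)]
             for rw, rn, rd in zip(W, num, den)]
        # Normalize W's columns; rescale H's rows so W @ H is unchanged.
        for k in range(rank):
            norm = sum(W[i][k] ** 2 for i in range(m)) ** 0.5 + eps
            for i in range(m):
                W[i][k] /= norm
            H[k] = [h * norm for h in H[k]]
    return W, H
```

The multiplicative form keeps every entry nonnegative without an explicit projection, as long as the initial values are nonnegative.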

forms the conceptual basis for regression regularization methods such as LASSO and ridge regression. Regularization methods introduce bias into the regression...

31 KB (4,228 words) - 02:47, 4 July 2025
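The bias that ridge regression introduces is easiest to see with a single predictor and no intercept. In that case the estimate has the closed form $\hat\beta = \sum_i x_i y_i / (\sum_i x_i^2 + \lambda)$. This reduced setting is an assumption made for brevity. The general case solves $(X^\top X + \lambda I)\beta = X^\top y$.

```python
def ridge_1d(x, y, lam):
    """Ridge estimate for y ~ beta * x (single predictor, no intercept).

    lam = 0 gives ordinary least squares; lam > 0 shrinks beta toward 0.
    """
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    return sxy / (sxx + lam)

x, y = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
for lam in (0.0, 14.0):
    print(lam, ridge_1d(x, y, lam))
```

The data are fit exactly by a slope of 2.0. With `lam` equal to $\sum x_i^2 = 14$, the estimate is pulled halfway to zero, to 1.0. This shrinkage is the bias traded for lower variance.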

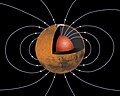

stripes. Using sparse solutions (e.g., L1 regularization) of crustal-field measurements instead of smoothing solutions (e.g., L2 regularization) shows highly...

22 KB (2,148 words) - 20:06, 19 June 2025

completion problem is an application of matrix regularization, which is a generalization of vector regularization. For example, in the low-rank matrix completion...

39 KB (6,402 words) - 08:00, 12 July 2025
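A very small instance of low-rank matrix completion fits a rank-1 factorization $M_{ij} \approx u_i v_j$ to the observed entries only, with an $L_2$ penalty on both factors. The result then fills in the missing entries. This swaps in a factorization approach for illustration; the nuclear-norm regularization often used for this problem is not shown. Missing entries are marked `None`, and the function name and default penalty are hypothetical.

```python
def rank1_complete(M, lam=1e-3, iters=500):
    """Fill missing entries (None) of M with a regularized rank-1 factorization.

    Alternating least squares: each u[i] (then each v[j]) is the ridge
    solution given the other factor, using only the observed entries.
    """
    m, n = len(M), len(M[0])
    u, v = [1.0] * m, [1.0] * n
    for _ in range(iters):
        for i in range(m):
            obs = [j for j in range(n) if M[i][j] is not None]
            u[i] = sum(M[i][j] * v[j] for j in obs) / (sum(v[j] ** 2 for j in obs) + lam)
        for j in range(n):
            obs = [i for i in range(m) if M[i][j] is not None]
            v[j] = sum(M[i][j] * u[i] for i in obs) / (sum(u[i] ** 2 for i in obs) + lam)
    # Keep observed entries; predict the missing ones from the factors.
    return [[M[i][j] if M[i][j] is not None else u[i] * v[j] for j in range(n)]
            for i in range(m)]
```

For `[[1, 2], [2, 4], [3, None]]`, whose observed entries are consistent with a rank-1 matrix, the missing entry is predicted close to 6. The small penalty shrinks it very slightly.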