Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse...

111 KB (14,230 words) - 02:57, 22 May 2025

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

72 KB (10,264 words) - 06:07, 14 May 2025
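
As a rough illustration of the definition in the snippet above, the sketch below computes the entropy of a discrete distribution as the average of -log p(x). The function name `shannon_entropy`, its `base` parameter, and the example distributions are assumptions made for this illustration and are not taken from the listed article.

```python
import math

def shannon_entropy(probs, base=2.0):
    """Entropy of a discrete distribution; base 2 gives the result in bits.

    Hypothetical helper written for this illustration only.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 are skipped: p * log(p) tends to 0 as p -> 0.
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# A fair coin is maximally uncertain for two outcomes; a biased coin is less so.
fair_coin = shannon_entropy([0.5, 0.5])
biased_coin = shannon_entropy([0.9, 0.1])
print(fair_coin, biased_coin)
```

The fair coin comes out at one bit, and the biased coin at less than half a bit, which matches the reading of entropy as average uncertainty.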

Second law of thermodynamics (redirect from Law of Entropy)

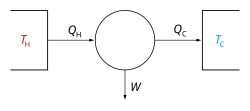

process." The second law of thermodynamics establishes the concept of entropy as a physical property of a thermodynamic system. It predicts whether processes...

107 KB (15,472 words) - 01:32, 4 May 2025

Look up entropy in Wiktionary, the free dictionary. Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness...

5 KB (707 words) - 12:45, 16 February 2025

In information theory, the cross-entropy between two probability distributions p and q, over the same underlying...

19 KB (3,264 words) - 23:00, 21 April 2025
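
A minimal sketch of cross-entropy for two discrete distributions over the same outcomes, assuming the standard definition H(p, q) = -Σ p(x) log q(x). The helper names and the example distributions `p` and `q` are hypothetical. The sketch also checks the standard identity H(p, q) = H(p) + D_KL(p ∥ q) numerically.

```python
import math

def cross_entropy(p, q):
    """H(p, q) = -sum_x p(x) * log2 q(x), in bits.

    Assumes q(x) > 0 wherever p(x) > 0; otherwise math.log2 raises ValueError.
    """
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """D_KL(p || q) = sum_x p(x) * log2(p(x) / q(x)), in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical example distributions over three outcomes.
p = [0.5, 0.25, 0.25]
q = [0.25, 0.25, 0.5]

h_p = cross_entropy(p, p)    # cross-entropy of p with itself is the entropy of p
h_pq = cross_entropy(p, q)
d_kl = kl_divergence(p, q)
print(h_p, h_pq, d_kl)
```

The gap between `h_pq` and `h_p` equals `d_kl`, so the cross-entropy is never smaller than the entropy of p.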

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared...

4 KB (478 words) - 02:27, 14 May 2025

In chemical kinetics, the entropy of activation of a reaction is one of the two parameters (along with the enthalpy of activation) that are typically...

4 KB (551 words) - 09:21, 27 December 2024

Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The...

22 KB (3,526 words) - 01:18, 25 April 2025
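
The four special cases named in the snippet above can be sketched with one function, assuming the usual definition H_α(p) = log(Σ p(x)^α) / (1 - α) with the limits α → 1 (Shannon) and α → ∞ (min-entropy). The function name `renyi_entropy` and the example distribution are hypothetical.

```python
import math

def renyi_entropy(probs, alpha):
    """Rényi entropy of order alpha for a discrete distribution, in bits."""
    support = [p for p in probs if p > 0]
    if alpha == 0:
        # Hartley entropy: log of the number of outcomes with nonzero probability.
        return math.log2(len(support))
    if alpha == 1:
        # Shannon entropy, taken as the limit alpha -> 1.
        return -sum(p * math.log2(p) for p in support)
    if alpha == math.inf:
        # Min-entropy, determined by the single most likely outcome.
        return -math.log2(max(support))
    return math.log2(sum(p ** alpha for p in support)) / (1 - alpha)

dist = [0.5, 0.25, 0.25]  # hypothetical example distribution
hartley = renyi_entropy(dist, 0)
shannon = renyi_entropy(dist, 1)
collision = renyi_entropy(dist, 2)
min_entropy = renyi_entropy(dist, math.inf)
print(hartley, shannon, collision, min_entropy)
```

For this distribution the values decrease as the order grows, with min-entropy the smallest of the four.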

and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random...

64 KB (7,976 words) - 13:50, 10 May 2025

The entropy unit is a non-S.I. unit of thermodynamic entropy, usually denoted by "e.u." or "eU" and equal to one calorie per kelvin per mole, or 4.184...

521 bytes (72 words) - 04:45, 6 November 2024

In physics, the von Neumann entropy, named after John von Neumann, is a measure of the statistical uncertainty within a description of a quantum system...

35 KB (5,061 words) - 13:27, 1 March 2025

Black hole thermodynamics (redirect from Black hole entropy)

law of thermodynamics requires that black holes have entropy. If black holes carried no entropy, it would be possible to violate the second law by throwing...

25 KB (3,223 words) - 18:21, 16 February 2025

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable Y...

11 KB (2,142 words) - 15:57, 16 May 2025

mathematical theory of probability, the entropy rate or source information rate is a function assigning an entropy to a stochastic process. For a strongly...

5 KB (784 words) - 18:08, 6 November 2024

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_KL(P ∥ Q)...

77 KB (13,054 words) - 16:34, 16 May 2025

entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy,...

31 KB (4,196 words) - 01:16, 21 March 2025

entropy is a sociological theory that evaluates social behaviours using a method based on the second law of thermodynamics. The equivalent of entropy...

2 KB (191 words) - 02:22, 20 December 2024

Boltzmann constant (redirect from Dimensionless entropy)

gas constant, in Planck's law of black-body radiation and Boltzmann's entropy formula, and is used in calculating thermal noise in resistors. The Boltzmann...

26 KB (2,946 words) - 02:00, 12 March 2025

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend...

23 KB (2,842 words) - 23:31, 21 April 2025

conditional quantum entropy is an entropy measure used in quantum information theory. It is a generalization of the conditional entropy of classical information...

4 KB (582 words) - 09:24, 6 February 2023

Heat death of the universe (redirect from Entropy death)

energy, and will therefore be unable to sustain processes that increase entropy. Heat death does not imply any particular absolute temperature; it only...

30 KB (3,520 words) - 23:37, 13 May 2025

Research concerning the relationship between the thermodynamic quantity entropy and both the origin and evolution of life began around the turn of the...

63 KB (8,442 words) - 00:31, 23 May 2025

Maximum entropy thermodynamics Maximum entropy spectral estimation Principle of maximum entropy Maximum entropy probability distribution Maximum entropy classifier...

632 bytes (99 words) - 18:19, 15 July 2022

The concept of entropy was first developed by German physicist Rudolf Clausius in the mid-nineteenth century as a thermodynamic property that predicts that...

18 KB (2,621 words) - 19:57, 18 March 2025

The entropy of entanglement (or entanglement entropy) is a measure of the degree of quantum entanglement between two subsystems constituting a two-part...

9 KB (1,608 words) - 23:09, 9 May 2025

Third law of thermodynamics (section Example: Entropy change of a crystal lattice heated by an incoming photon)

The third law of thermodynamics states that the entropy of a closed system at thermodynamic equilibrium approaches a constant value when its temperature...

28 KB (3,880 words) - 22:24, 7 May 2025

In classical thermodynamics, entropy (from Greek τροπή (tropḗ) 'transformation') is a property of a thermodynamic system that expresses the direction...

17 KB (2,587 words) - 15:45, 28 December 2024

Holographic principle (redirect from Holographic entropy bound)

bound of black hole thermodynamics, which conjectures that the maximum entropy in any region scales with the radius squared, rather than cubed as might...

32 KB (3,969 words) - 23:05, 17 May 2025

theory, the entropy power inequality (EPI) is a result that relates to the so-called "entropy power" of random variables. It shows that the entropy power of...

4 KB (563 words) - 13:45, 23 April 2025

The min-entropy, in information theory, is the smallest of the Rényi family of entropies, corresponding to the most conservative way of measuring the unpredictability...

15 KB (2,716 words) - 23:06, 21 April 2025