expressions for information theory developed by Claude Shannon and Ralph Hartley in the 1940s are similar to the mathematics of statistical thermodynamics worked...

29 KB (3,734 words) - 21:43, 19 June 2025

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

72 KB (10,220 words) - 13:03, 6 June 2025

change and information systems including the transmission of information in telecommunication. Entropy is central to the second law of thermodynamics, which...

111 KB (14,228 words) - 21:07, 24 May 2025

thermodynamics) Entropy (energy dispersal) Entropy of mixing Entropy (order and disorder) Entropy (information theory) History of entropy Information...

18 KB (2,621 words) - 19:57, 18 March 2025

The laws of thermodynamics are a set of scientific laws which define a group of physical quantities, such as temperature, energy, and entropy, that characterize...

20 KB (2,893 words) - 00:42, 19 June 2025

Negentropy (redirect from Negative entropy)

In information theory and statistics, negentropy is used as a measure of distance to normality. It is also known as negative entropy or syntropy. The concept...

11 KB (1,225 words) - 18:26, 10 June 2025

In thermodynamics, entropy is a numerical quantity that shows that many physical processes can go in only one direction in time. For example, cream and...

33 KB (5,257 words) - 11:59, 23 March 2025

In physics, maximum entropy thermodynamics (colloquially, MaxEnt thermodynamics) views equilibrium thermodynamics and statistical mechanics as inference...

27 KB (3,612 words) - 07:30, 29 April 2025

measures in information theory are mutual information, channel capacity, error exponents, and relative entropy. Important sub-fields of information theory include...

64 KB (7,973 words) - 23:39, 4 June 2025

Maxwell's demon (category Philosophy of thermal and statistical physics)

relationship between thermodynamics and information theory. Most scientists argue that, on theoretical grounds, no device can violate the second law in this way....

39 KB (4,675 words) - 02:54, 25 May 2025

Quantum thermodynamics is the study of the relations between two independent physical theories: thermodynamics and quantum mechanics. The two independent...

40 KB (5,074 words) - 14:21, 24 May 2025

contributions by Rolf Landauer in the 1960s, are explored further in the article Entropy in thermodynamics and information theory). The publication of Shannon's...

14 KB (1,725 words) - 14:51, 25 May 2025

Non-equilibrium thermodynamics is a branch of thermodynamics that deals with physical systems that are not in thermodynamic equilibrium but can be described in terms...

51 KB (6,336 words) - 01:00, 20 June 2025

and work Entropy in thermodynamics and information theory, the relationship between thermodynamic entropy and information (Shannon) entropy Entropy (energy...

5 KB (707 words) - 12:45, 16 February 2025

energy, and coined the term entropy. Since the mid-20th century the concept of entropy has found application in the field of information theory, describing...

22 KB (3,131 words) - 19:20, 27 May 2025

Thermodynamics is a branch of physics that deals with heat, work, and temperature, and their relation to energy, entropy, and the physical properties...

48 KB (5,843 words) - 01:18, 16 June 2025

proposing a theory of history based on the second law of thermodynamics and on the principle of entropy. The 1944 book What is Life? by Nobel-laureate physicist...

63 KB (8,442 words) - 00:31, 23 May 2025

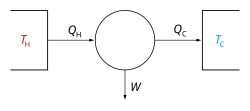

into work in a cyclic process." The second law of thermodynamics establishes the concept of entropy as a physical property of a thermodynamic system....

107 KB (15,472 words) - 00:48, 20 June 2025

cryogenics and electricity generation. The development of thermodynamics both drove and was driven by atomic theory. It also, albeit in a subtle manner...

34 KB (3,780 words) - 15:53, 31 March 2025

In information theory, the Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision...

22 KB (3,526 words) - 01:18, 25 April 2025

Holographic principle (redirect from Holographic entropy bound)

thermodynamics, which conjectures that the maximum entropy in any region scales with the radius squared, rather than cubed as might be expected. In the...

32 KB (3,969 words) - 23:05, 17 May 2025

Landauer's principle (category Entropy and information)

limit Bekenstein bound Kolmogorov complexity Entropy in thermodynamics and information theory Information theory Jarzynski equality Limits of computation...

15 KB (1,619 words) - 19:56, 23 May 2025

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$...

77 KB (13,067 words) - 13:07, 12 June 2025

Orders of magnitude (data) (redirect from Information capacity of the universe)

number of atoms in 12 grams of carbon-12 isotope. See Entropy in thermodynamics and information theory. Entropy (information theory), such as the amount...

63 KB (843 words) - 20:10, 9 June 2025

concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the...

56 KB (8,853 words) - 23:22, 5 June 2025

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend...

23 KB (2,842 words) - 23:31, 21 April 2025

Laplace's demon (section Chaos theory)

irreversibility, entropy, and the second law of thermodynamics. In other words, Laplace's demon was based on the premise of reversibility and classical mechanics;...

12 KB (1,410 words) - 17:59, 12 April 2025

In thermodynamics, entropy is often associated with the amount of order or disorder in a thermodynamic system. This stems from Rudolf Clausius' 1862 assertion...

24 KB (3,060 words) - 17:12, 10 March 2024

bits of spacetime information. As such, entropic gravity is said to abide by the second law of thermodynamics under which the entropy of a physical system...

28 KB (3,737 words) - 18:16, 15 June 2025

identical in form to Havrda–Charvát structural α-entropy, introduced in 1967 within information theory. Given a discrete set of probabilities $\{p_i\}$...

24 KB (2,881 words) - 17:28, 12 June 2025