In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

72 KB (10,220 words) - 13:03, 6 June 2025
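
The entropy described above is H(X) = -Σ p(x) log p(x), where the sum runs over the outcomes of a discrete random variable. Below is a minimal Python sketch that assumes the distribution is given as a list of probabilities. `shannon_entropy` is an illustrative name, not a library function.

```python
import math

def shannon_entropy(probs, base=2.0):
    """Return H(X) = -sum p * log(p) for a discrete distribution (bits by default).

    Zero-probability outcomes are skipped, following the convention 0 * log 0 = 0.
    """
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)

# A fair coin carries 1 bit of uncertainty; a fair six-sided die carries log2(6) bits.
print(shannon_entropy([0.5, 0.5]), shannon_entropy([1 / 6] * 6))
```

Base 2 gives bits. Passing `base=math.e` gives nats.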

k_B is the Boltzmann constant. The defining expression for entropy in the theory of information established by Claude E. Shannon in 1948 is of the form:...

29 KB (3,734 words) - 15:19, 27 March 2025

in information theory are mutual information, channel capacity, error exponents, and relative entropy. Important sub-fields of information theory include...

64 KB (7,973 words) - 23:39, 4 June 2025

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable Y...

11 KB (2,142 words) - 15:57, 16 May 2025
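
Conditional entropy can be computed directly from a joint distribution as H(Y | X) = -Σ p(x, y) log(p(x, y) / p(x)). This is a minimal sketch that assumes the joint distribution is a nested list with `joint[i][j] = P(X = x_i, Y = y_j)`. The function name is illustrative.

```python
import math

def conditional_entropy(joint, base=2.0):
    """H(Y | X) = -sum_{x,y} p(x, y) * log(p(x, y) / p(x)).

    `joint[i][j]` is P(X = x_i, Y = y_j); each row's sum is the marginal P(X = x_i).
    """
    h = 0.0
    for row in joint:
        p_x = sum(row)  # marginal probability of this value of X
        for p_xy in row:
            if p_xy > 0:
                h -= p_xy * math.log(p_xy / p_x, base)
    return h

# Y is a copy of X: once X is known, nothing more is needed to describe Y.
copy = [[0.5, 0.0], [0.0, 0.5]]
# Y is a fair coin independent of X: a full bit is still needed.
indep = [[0.25, 0.25], [0.25, 0.25]]
print(conditional_entropy(copy), conditional_entropy(indep))
```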

arguing that the entropy of statistical mechanics and the information entropy of information theory are the same concept. Consequently, statistical mechanics...

31 KB (4,196 words) - 01:16, 21 March 2025

In information theory, joint entropy is a measure of the uncertainty associated with a set of variables. The joint Shannon entropy (in bits) of two discrete...

6 KB (1,159 words) - 16:23, 16 May 2025
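
The joint Shannon entropy of two discrete variables, in bits, is H(X, Y) = -Σ p(x, y) log2 p(x, y). This is a minimal sketch that assumes the joint distribution is a nested list of probabilities. `joint_entropy` is an illustrative name.

```python
import math

def joint_entropy(joint):
    """Joint Shannon entropy in bits: H(X, Y) = -sum p(x, y) * log2 p(x, y)."""
    return -sum(p * math.log2(p) for row in joint for p in row if p > 0)

# Two independent fair coins: H(X, Y) = H(X) + H(Y) = 2 bits.
two_coins = [[0.25, 0.25], [0.25, 0.25]]
# Two perfectly correlated fair coins: H(X, Y) = H(X) = 1 bit.
correlated = [[0.5, 0.0], [0.0, 0.5]]
print(joint_entropy(two_coins), joint_entropy(correlated))  # prints 2.0 1.0
```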

In information theory, the Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision...

22 KB (3,526 words) - 01:18, 25 April 2025
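
The Rényi entropy of order α is H_α = log(Σ p_i^α) / (1 - α). Several of the entropies named above are special cases:

- α = 0 gives Hartley entropy.
- α → 1 gives Shannon entropy.
- α = 2 gives collision entropy.
- α → ∞ gives min-entropy.

This is a minimal sketch that handles the two limits explicitly. `renyi_entropy` is an illustrative name.

```python
import math

def renyi_entropy(probs, alpha, base=2.0):
    """Rényi entropy H_alpha = log(sum p_i**alpha) / (1 - alpha), for alpha >= 0.

    alpha = 0 is Hartley entropy (log of the support size), alpha -> 1 is Shannon
    entropy, alpha = 2 is collision entropy, and alpha -> infinity is min-entropy.
    """
    support = [p for p in probs if p > 0]
    if alpha == 1:  # limit alpha -> 1: Shannon entropy
        return -sum(p * math.log(p, base) for p in support)
    if math.isinf(alpha):  # limit alpha -> infinity: min-entropy
        return -math.log(max(support), base)
    return math.log(sum(p ** alpha for p in support), base) / (1 - alpha)

dist = [0.5, 0.25, 0.25]
for a in (0, 1, 2, math.inf):
    print(a, renyi_entropy(dist, a))  # non-increasing as alpha grows
```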

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of...

36 KB (4,479 words) - 17:16, 8 April 2025
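
The simplest case is a finite set of n outcomes with no further constraints. There, the maximum entropy distribution is the uniform one, with entropy log2 n. The sketch below checks this empirically against randomly drawn distributions. The sampling scheme (normalized uniform weights) is an arbitrary illustrative choice, and the helper names are hypothetical.

```python
import math
import random

def entropy_bits(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def random_distribution(n, rng):
    """Draw a random probability vector over n outcomes by normalizing uniform weights."""
    weights = [rng.random() for _ in range(n)]
    total = sum(weights)
    return [w / total for w in weights]

n = 8
rng = random.Random(0)
h_uniform = entropy_bits([1 / n] * n)  # log2(8) = 3 bits
h_best_random = max(entropy_bits(random_distribution(n, rng)) for _ in range(1000))
print(h_uniform, h_best_random)  # no random draw exceeds the uniform distribution
```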

Negentropy (redirect from Negative entropy)

In information theory and statistics, negentropy is used as a measure of distance to normality. It is also known as negative entropy or syntropy. The...

11 KB (1,225 words) - 18:26, 10 June 2025
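
Negentropy is commonly defined as J(x) = h(x_Gauss) - h(x). Here h is differential entropy and x_Gauss is a Gaussian with the same variance as x. J is zero for a Gaussian and positive otherwise. As a worked example, Uniform[a, b] has h = ln(b - a) and variance (b - a)^2 / 12. Its negentropy is therefore 0.5 ln(2πe / 12) ≈ 0.177 nats, whatever the width. This is a minimal sketch of that closed form. The function names are illustrative.

```python
import math

def gaussian_differential_entropy(variance):
    """h(N(mu, variance)) = 0.5 * ln(2*pi*e*variance), in nats."""
    return 0.5 * math.log(2 * math.pi * math.e * variance)

def uniform_negentropy(a, b):
    """Negentropy J = h(Gaussian with the same variance) - h(Uniform[a, b]), in nats."""
    width = b - a
    h_uniform = math.log(width)  # differential entropy of Uniform[a, b]
    variance = width ** 2 / 12   # variance of Uniform[a, b]
    return gaussian_differential_entropy(variance) - h_uniform

print(uniform_negentropy(0, 1), uniform_negentropy(-5, 5))  # both about 0.177 nats
```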

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared...

4 KB (478 words) - 02:27, 14 May 2025
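
Huffman coding is one entropy coding method. For an optimal binary prefix code such as a Huffman code, the expected codeword length L satisfies H ≤ L < H + 1, where H is the source entropy in bits. This is a minimal sketch that computes only the Huffman codeword lengths, using Python's `heapq`. The function name and the example probabilities are illustrative.

```python
import heapq
import math

def huffman_code_lengths(probs):
    """Return the codeword length of each symbol in a binary Huffman code."""
    if len(probs) == 1:
        return [1]  # a lone symbol still needs one bit to be transmitted
    # Heap items: (subtree probability, unique tie-breaker, symbols in the subtree).
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, syms1 = heapq.heappop(heap)  # two least probable subtrees
        p2, _, syms2 = heapq.heappop(heap)
        for s in syms1 + syms2:
            lengths[s] += 1  # each merge adds one bit to every symbol below it
        heapq.heappush(heap, (p1 + p2, counter, syms1 + syms2))
        counter += 1
    return lengths

probs = [0.4, 0.2, 0.2, 0.1, 0.1]
lengths = huffman_code_lengths(probs)
avg_len = sum(p * l for p, l in zip(probs, lengths))
entropy = -sum(p * math.log2(p) for p in probs)
print(lengths, avg_len, entropy)  # entropy <= avg_len < entropy + 1
```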

In the mathematical theory of probability, the entropy rate or source information rate is a function assigning an entropy to a stochastic process. For...

5 KB (804 words) - 00:13, 3 June 2025
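
For a stationary Markov chain with transition matrix P and stationary distribution μ, the entropy rate has the closed form H = -Σ_i μ_i Σ_j P_ij log2 P_ij. This is a minimal sketch under one assumption: the chain is irreducible and aperiodic, so that power iteration converges to μ. The function names are illustrative.

```python
import math

def stationary_distribution(P, iterations=1000):
    """Approximate the stationary distribution mu (mu = mu P) by power iteration.

    Assumes an irreducible, aperiodic chain so that the iteration converges.
    """
    n = len(P)
    mu = [1.0 / n] * n
    for _ in range(iterations):
        mu = [sum(mu[i] * P[i][j] for i in range(n)) for j in range(n)]
    return mu

def markov_entropy_rate(P):
    """Entropy rate in bits per step: -sum_i mu_i sum_j P_ij log2 P_ij."""
    mu = stationary_distribution(P)
    return -sum(
        mu[i] * p * math.log2(p)
        for i, row in enumerate(P)
        for p in row
        if p > 0
    )

# Two-state chain: state 0 switches with probability 0.1, state 1 with probability 0.5.
P = [[0.9, 0.1],
     [0.5, 0.5]]
print(markov_entropy_rate(P))  # stationary mu = (5/6, 1/6)
```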

concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the...

56 KB (8,853 words) - 23:22, 5 June 2025
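
Mutual information can be written in terms of entropies as I(X; Y) = H(X) + H(Y) - H(X, Y). This is a minimal sketch that assumes a joint distribution given as a nested list. Its example is a binary symmetric channel with a uniform input, for which I(X; Y) = 1 - H_b(ε). The function names are illustrative.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X; Y) = H(X) + H(Y) - H(X, Y) in bits; joint[i][j] = P(X = x_i, Y = y_j)."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    h_xy = entropy_bits([p for row in joint for p in row])
    return entropy_bits(p_x) + entropy_bits(p_y) - h_xy

# Binary symmetric channel with crossover probability 0.1 and a uniform input.
eps = 0.1
bsc = [[0.5 * (1 - eps), 0.5 * eps],
       [0.5 * eps, 0.5 * (1 - eps)]]
print(mutual_information(bsc))  # equals 1 - H_b(0.1), about 0.531 bits
```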

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend...

23 KB (2,842 words) - 23:31, 21 April 2025
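
For a continuous density f, differential entropy is h(X) = -E[ln f(X)]. For a Gaussian with standard deviation σ, this evaluates to 0.5 ln(2πe σ^2) nats. Unlike discrete entropy, it can be negative: with σ = 0.1, h ≈ -0.88 nats. This is a minimal sketch that compares the closed form with a Monte Carlo average of -ln f(X). The sample size and seed are arbitrary illustrative choices.

```python
import math
import random

def gaussian_entropy_closed_form(sigma):
    """Differential entropy of N(mu, sigma^2) in nats: 0.5 * ln(2*pi*e*sigma^2)."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)

def gaussian_entropy_monte_carlo(sigma, n=100_000, seed=0):
    """Estimate h(X) = -E[ln f(X)] by averaging -ln f over samples of X ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(0.0, sigma)
        log_density = -0.5 * math.log(2 * math.pi * sigma ** 2) - x * x / (2 * sigma ** 2)
        total -= log_density
    return total / n

for sigma in (1.0, 0.1):  # sigma = 0.1 gives a negative differential entropy
    print(sigma, gaussian_entropy_closed_form(sigma), gaussian_entropy_monte_carlo(sigma))
```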

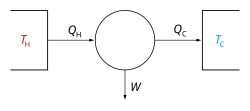

coined the term entropy. Since the mid-20th century the concept of entropy has found application in the field of information theory, describing an analogous...

22 KB (3,131 words) - 19:20, 27 May 2025

message source; Differential entropy, a generalization of Entropy (information theory) to continuous random variables; Entropy of entanglement, related to...

5 KB (707 words) - 12:45, 16 February 2025

entropy is an entropy measure used in quantum information theory. It is a generalization of the conditional entropy of classical information theory....

4 KB (582 words) - 09:24, 6 February 2023

uncertainty), entropy encoding, entropy (information theory), Fisher information, Hick's law, Huffman coding, information bottleneck method, information theoretic...

1 KB (93 words) - 09:42, 8 August 2023

Entropic gravity, also known as emergent gravity, is a theory in modern physics that describes gravity as an entropic force—a force with macro-scale homogeneity...

28 KB (3,736 words) - 06:45, 9 May 2025

using quantum information processing techniques. Quantum information refers to both the technical definition in terms of Von Neumann entropy and the general...

42 KB (4,547 words) - 11:18, 2 June 2025

inference techniques rooted in Shannon information theory, Bayesian probability, and the principle of maximum entropy. These techniques are relevant to any...

27 KB (3,612 words) - 07:30, 29 April 2025

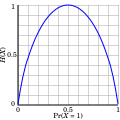

In information theory, the binary entropy function, denoted H(p) or H_b(p)...

6 KB (1,071 words) - 17:05, 6 May 2025
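
The binary entropy function is the entropy of a Bernoulli(p) variable: H_b(p) = -p log2 p - (1 - p) log2(1 - p), with H_b(0) = H_b(1) = 0. This is a minimal sketch. `binary_entropy` is an illustrative name.

```python
import math

def binary_entropy(p):
    """H_b(p) = -p log2 p - (1 - p) log2(1 - p), with H_b(0) = H_b(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

# Maximal (1 bit) at p = 0.5 and symmetric about it.
print(binary_entropy(0.5), binary_entropy(0.1), binary_entropy(0.9))
```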

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

distance, Information gain in decision trees, Information gain ratio, Information theory and measure theory, Jensen–Shannon divergence, Quantum relative entropy, Solomon...

77 KB (13,067 words) - 13:07, 12 June 2025

In statistical mechanics, entropy is formulated as a statistical property using probability theory. The statistical entropy perspective was introduced...

18 KB (2,621 words) - 19:57, 18 March 2025

science, climate change and information systems including the transmission of information in telecommunication. Entropy is central to the second law...

111 KB (14,228 words) - 21:07, 24 May 2025

Holographic principle (redirect from Holographic entropy bound)

used measure of information content, now known as Shannon entropy. As an objective measure of the quantity of information, Shannon entropy has been enormously...

32 KB (3,969 words) - 23:05, 17 May 2025

The joint quantum entropy generalizes the classical joint entropy to the context of quantum information theory. Intuitively, given two quantum states ρ...

5 KB (827 words) - 13:37, 16 August 2023

Rate–distortion theory is a major branch of information theory which provides the theoretical foundations for lossy data compression; it addresses the...

15 KB (2,327 words) - 09:59, 31 March 2025

In information theory, the cross-entropy between two probability distributions p and q, over the same underlying...

19 KB (3,264 words) - 23:00, 21 April 2025
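
The cross-entropy of q relative to p is H(p, q) = -Σ p_i log q_i. It decomposes as H(p, q) = H(p) + D_KL(p ‖ q), so it is never smaller than H(p). This is a minimal sketch in bits. It assumes q_i > 0 wherever p_i > 0. The function names are illustrative.

```python
import math

def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i log2 q_i in bits; requires q_i > 0 wherever p_i > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """D_KL(p || q) = sum_i p_i log2(p_i / q_i) in bits."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.25, 0.25]
q = [1 / 3, 1 / 3, 1 / 3]
h_p = cross_entropy(p, p)  # H(p, p) is just the Shannon entropy H(p)
print(cross_entropy(p, q), h_p + kl_divergence(p, q))  # equal up to rounding
```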

Quantum Fisher information. Other measures employed in information theory: Entropy (information theory), Kullback–Leibler divergence, Self-information. Robert, Christian...

52 KB (7,376 words) - 12:50, 8 June 2025

random variable. The Shannon information is closely related to entropy, which is the expected value of the self-information of a random variable, quantifying...

27 KB (4,445 words) - 15:34, 2 June 2025
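
The self-information (surprisal) of an outcome with probability p is I = -log2 p bits. Entropy is the expected value of this quantity. This is a minimal sketch with a made-up four-symbol distribution. `self_information` is an illustrative name.

```python
import math

def self_information(p):
    """Surprisal of an outcome with probability p, in bits: I = -log2 p."""
    return -math.log2(p)

probs = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
surprisals = {x: self_information(p) for x, p in probs.items()}
# Entropy is the probability-weighted average of the self-information.
entropy = sum(p * surprisals[x] for x, p in probs.items())
print(surprisals, entropy)  # prints {'a': 1.0, 'b': 2.0, 'c': 3.0, 'd': 3.0} 1.75
```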