In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$...

11 KB (2,142 words) - 05:02, 6 July 2025

The conditional quantum entropy is an entropy measure used in quantum information theory. It is a generalization of the conditional entropy of classical...

4 KB (582 words) - 09:24, 6 February 2023

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

71 KB (10,208 words) - 07:29, 15 July 2025
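
A minimal Python sketch of the Shannon entropy $H(X) = -\sum_x p(x)\log_2 p(x)$ of a discrete distribution, given as a list of probabilities. The function name `shannon_entropy` and the use of base-2 logarithms (so results are in bits) are illustrative assumptions.

```python
import math

def shannon_entropy(probs):
    """Entropy in bits; outcomes with probability 0 contribute nothing (0 log 0 = 0)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

print(shannon_entropy([0.5, 0.5]))   # fair coin: 1 bit
print(shannon_entropy([0.25] * 4))   # uniform over four outcomes: 2 bits
print(shannon_entropy([1.0]))        # a certain outcome carries no uncertainty
```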

Despite similar notation, joint entropy should not be confused with cross-entropy. The conditional entropy or conditional uncertainty of X given random...

69 KB (8,508 words) - 04:47, 12 July 2025

Shannon entropy and its quantum generalization, the von Neumann entropy, one can define a conditional version of min-entropy. The conditional quantum...

15 KB (2,719 words) - 23:06, 21 April 2025

to a stochastic process. For a strongly stationary process, the conditional entropy of the latest random variable eventually tends towards this rate value...

5 KB (862 words) - 05:07, 9 July 2025

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$...

77 KB (13,075 words) - 21:27, 5 July 2025
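
For discrete distributions the divergence is $D_{\text{KL}}(P \parallel Q) = \sum_x P(x)\log\frac{P(x)}{Q(x)}$. The sketch below assumes both distributions are equal-length lists over the same outcomes and uses base-2 logarithms; `kl_divergence` is a hypothetical helper name. The example shows that the divergence is not symmetric.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in bits for discrete distributions given as equal-length lists.

    Terms with p(x) = 0 contribute 0; if q(x) = 0 where p(x) > 0, the divergence is infinite.
    """
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log2(px / qx)
    return total

p = [0.5, 0.5]
q = [0.75, 0.25]
print(kl_divergence(p, q), kl_divergence(q, p))  # two different non-negative values
```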

$\mathrm{H}(X_1) + \ldots + \mathrm{H}(X_n)$. Joint entropy is used in the definition of conditional entropy:[22] $\mathrm{H}(X \mid Y) = \mathrm{H}(X,Y) - \mathrm{H}(Y)$...

6 KB (1,159 words) - 12:49, 14 June 2025
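
The identity $\mathrm{H}(X \mid Y) = \mathrm{H}(X,Y) - \mathrm{H}(Y)$ can be sketched in Python for a finite joint distribution stored as a nested list `joint[x][y]` $= P(X = x, Y = y)$. The function names and the example distribution ($X$ equals a fair bit $Y$ with probability 3/4) are illustrative assumptions, and logarithms are base 2.

```python
import math

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(X | Y) = H(X, Y) - H(Y), with joint[x][y] = P(X = x, Y = y)."""
    h_xy = entropy(p for row in joint for p in row)
    p_y = [sum(col) for col in zip(*joint)]  # marginal of Y: sum over x
    return h_xy - entropy(p_y)

# Hypothetical example: X agrees with a fair bit Y with probability 3/4
joint = [[0.375, 0.125],
         [0.125, 0.375]]
print(conditional_entropy(joint))  # about 0.811 bits, the binary entropy of 1/4
```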

Mutual information (redirect from Mutual entropy)

$(x,y)$. Expressed in terms of the entropy $H(\cdot)$ and the conditional entropy $H(\cdot \mid \cdot)$...

56 KB (8,853 words) - 23:22, 5 June 2025
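
In terms of entropies, mutual information satisfies $I(X;Y) = H(X) - H(X \mid Y) = H(X) + H(Y) - H(X,Y)$. A minimal sketch using the last form, assuming a joint distribution stored as `joint[x][y]` and base-2 logarithms; the helper names and examples are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X; Y) = H(X) + H(Y) - H(X, Y), with joint[x][y] = P(X = x, Y = y)."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    h_xy = entropy(p for row in joint for p in row)
    return entropy(p_x) + entropy(p_y) - h_xy

independent = [[0.25, 0.25], [0.25, 0.25]]  # X and Y independent fair bits
identical = [[0.5, 0.0], [0.0, 0.5]]        # X = Y, a single fair bit
print(mutual_information(independent), mutual_information(identical))  # 0 and 1 bit
```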

In information theory, the cross-entropy between two probability distributions $p$ and $q$, over the same underlying...

19 KB (3,264 words) - 13:57, 8 July 2025
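
For discrete distributions, the cross-entropy is $H(p, q) = -\sum_x p(x)\log q(x)$, and it decomposes as $H(p, q) = H(p) + D_{\text{KL}}(p \parallel q)$, so it is never smaller than $H(p)$. A sketch under the assumption of equal-length probability lists and base-2 logarithms; the names are illustrative.

```python
import math

def cross_entropy(p, q):
    """H(p, q) = -sum_x p(x) log2 q(x); infinite if q(x) = 0 where p(x) > 0."""
    total = 0.0
    for px, qx in zip(p, q):
        if px > 0:
            if qx == 0:
                return math.inf
            total -= px * math.log2(qx)
    return total

def entropy(p):
    """H(p) is the special case H(p, p)."""
    return cross_entropy(p, p)

p = [0.5, 0.25, 0.25]
q = [1 / 3, 1 / 3, 1 / 3]
# The gap H(p, q) - H(p) equals D_KL(p || q) and is never negative
print(cross_entropy(p, q), entropy(p))  # log2(3), about 1.585, and 1.5
```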

$\ldots y,z)\,dx\,dy\,dz$. Alternatively, we may write it in terms of joint and conditional entropies as $I(X;Y \mid Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z)$...

11 KB (2,385 words) - 15:00, 16 May 2025
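
The snippet cuts off the joint-entropy identity, which in full reads $I(X;Y \mid Z) = H(X,Z) + H(Y,Z) - H(X,Y,Z) - H(Z)$. The sketch below applies it to a joint distribution stored as a dictionary from outcome tuples $(x, y, z)$ to probabilities (an assumed representation, with hypothetical helper names). In the example, $X$ and $Y$ are independent fair bits and $Z = X \oplus Y$: conditioning on $Z$ makes them fully dependent.

```python
import math
from collections import defaultdict
from itertools import product

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def marginal(joint, axes):
    """Marginalize a dict {(x, y, z): prob} onto the coordinate indices in `axes`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in axes)] += p
    return out

def conditional_mutual_information(joint):
    """I(X; Y | Z) = H(X, Z) + H(Y, Z) - H(X, Y, Z) - H(Z)."""
    def h(axes):
        return entropy(marginal(joint, axes).values())
    return h((0, 2)) + h((1, 2)) - h((0, 1, 2)) - h((2,))

# Hypothetical example: X, Y independent fair bits and Z = X XOR Y
joint = {(x, y, x ^ y): 0.25 for x, y in product((0, 1), repeat=2)}
print(conditional_mutual_information(joint))  # 1 bit, although I(X; Y) = 0
```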

In physics, the von Neumann entropy, named after John von Neumann, is a measure of the statistical uncertainty within a description of a quantum system...

35 KB (5,061 words) - 13:27, 1 March 2025

for example, differential entropy may be negative. The differential analogues of entropy, joint entropy, conditional entropy, and mutual information are...

12 KB (2,183 words) - 21:38, 23 May 2025

joint, conditional differential entropy, and relative entropy are defined in a similar fashion. Unlike the discrete analog, the differential entropy has...

23 KB (2,842 words) - 23:31, 21 April 2025

$H(Y \mid X)$ are the entropy of the output signal $Y$ and the conditional entropy of the output signal given the input signal, respectively:...

15 KB (2,315 words) - 09:59, 31 March 2025

Neumann entropy, $S(\rho)$ the joint quantum entropy and $S(\rho_A \mid \rho_B)$ a quantum generalization of conditional entropy (not to be confused with conditional quantum...

21 KB (2,726 words) - 04:24, 10 July 2025

Logistic regression (redirect from Conditional logit analysis)

where $H(Y \mid X)$ is the conditional entropy and $D_{\text{KL}}$ is the Kullback–Leibler divergence...

127 KB (20,642 words) - 10:26, 11 July 2025

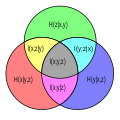

of random variables and a measure over sets. Namely the joint entropy, conditional entropy, and mutual information can be considered as the measure of a...

12 KB (1,762 words) - 22:37, 8 November 2024

the joint entropy. This is equivalent to the fact that the conditional quantum entropy may be negative, while the classical conditional entropy may never...

5 KB (827 words) - 13:37, 16 August 2023
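
The negativity of the conditional quantum entropy can be checked on the Bell state $(|00\rangle + |11\rangle)/\sqrt{2}$, using $S(A \mid B) = S(\rho_{AB}) - S(\rho_B)$. The joint state is pure, so $S(\rho_{AB}) = 0$, while the reduced state $\rho_B$ is maximally mixed with $S(\rho_B) = 1$ bit, giving $-1$. This sketch assumes NumPy is available; the helper names are hypothetical.

```python
import numpy as np

def von_neumann_entropy(rho):
    """S(rho) = -Tr(rho log2 rho), computed from the eigenvalues of a Hermitian density matrix."""
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]  # drop numerically zero eigenvalues (0 log 0 = 0)
    return float(-np.sum(evals * np.log2(evals)))

def partial_trace_first(rho_ab, dim_a, dim_b):
    """Trace out subsystem A from a density matrix on A (x) B, returning rho_B."""
    r = rho_ab.reshape(dim_a, dim_b, dim_a, dim_b)  # indices (a, b, a', b')
    return np.trace(r, axis1=0, axis2=2)            # sum over a = a'

# Bell state (|00> + |11>) / sqrt(2) and its density matrix
phi = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)
rho_ab = np.outer(phi, phi.conj())
rho_b = partial_trace_first(rho_ab, 2, 2)

s_cond = von_neumann_entropy(rho_ab) - von_neumann_entropy(rho_b)
print(s_cond)  # approximately -1: negative, unlike any classical H(X | Y)
```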

of Secrecy Systems, conditional entropy, conditional quantum entropy, confusion and diffusion, cross-entropy, data compression, entropic uncertainty (Hirschman...

1 KB (93 words) - 09:42, 8 August 2023

In statistics, a maximum-entropy Markov model (MEMM), or conditional Markov model (CMM), is a graphical model for sequence labeling that combines features...

7 KB (1,025 words) - 08:23, 21 June 2025

variable e in terms of its entropy. One can then subtract the content of e that is irrelevant to h (given by its conditional entropy conditioned on h) from...

12 KB (1,725 words) - 17:42, 3 May 2025

$H(X \mid Y) = -\sum_{i,j} P(x_i, y_j) \log P(x_i \mid y_j)$ is the conditional entropy, $P(e) = P(X \neq \tilde{X})$...

8 KB (1,504 words) - 21:12, 14 April 2025
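
The definition $H(X \mid Y) = -\sum_{i,j} P(x_i, y_j) \log P(x_i \mid y_j)$ can be evaluated directly, with $P(x_i \mid y_j) = P(x_i, y_j) / P(y_j)$. A minimal sketch assuming `joint[i][j]` $= P(x_i, y_j)$ and base-2 logarithms; the function name and the example distribution are illustrative.

```python
import math

def conditional_entropy_direct(joint):
    """H(X | Y) = -sum_{i,j} P(x_i, y_j) log2 P(x_i | y_j), joint[i][j] = P(x_i, y_j)."""
    p_y = [sum(col) for col in zip(*joint)]  # marginal P(y_j)
    h = 0.0
    for row in joint:
        for j, p_xy in enumerate(row):
            if p_xy > 0:  # zero-probability pairs contribute nothing
                h -= p_xy * math.log2(p_xy / p_y[j])
    return h

# Hypothetical example: given y_1, X is certain; given y_0, X is split 2/3 vs 1/3
joint = [[0.5, 0.0],
         [0.25, 0.25]]
print(conditional_entropy_direct(joint))  # about 0.689 bits
```

The direct sum agrees with the chain-rule form $H(X,Y) - H(Y)$: here $1.5 - H(Y)$ with $P(Y) = (0.75, 0.25)$.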

Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The...

22 KB (3,526 words) - 01:18, 25 April 2025
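
The Rényi entropy of order $\alpha \ge 0$, $\alpha \ne 1$, is $H_\alpha(X) = \frac{1}{1-\alpha}\log_2 \sum_x p(x)^\alpha$. The special cases named in the snippet appear as limits or particular orders: $\alpha = 0$ (Hartley entropy, the log of the support size), $\alpha \to 1$ (Shannon entropy), $\alpha = 2$ (collision entropy) and $\alpha \to \infty$ (min-entropy, $-\log_2 \max_x p(x)$). A sketch assuming base-2 logarithms and a hypothetical function name:

```python
import math

def renyi_entropy(probs, alpha):
    """Renyi entropy of order alpha >= 0, in bits."""
    support = [p for p in probs if p > 0]  # also avoids 0 ** 0 when alpha = 0
    if alpha == 1:  # limiting case: Shannon entropy
        return -sum(p * math.log2(p) for p in support)
    if math.isinf(alpha):  # limiting case: min-entropy
        return -math.log2(max(support))
    return math.log2(sum(p ** alpha for p in support)) / (1 - alpha)

probs = [0.5, 0.25, 0.25]
for a in (0, 1, 2, math.inf):  # Hartley, Shannon, collision, min-entropy
    print(a, renyi_entropy(probs, a))
```

The values are non-increasing in $\alpha$, so min-entropy is the most conservative of these measures.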

identically-distributed random variable, and the operational meaning of the Shannon entropy. Named after Claude Shannon, the source coding theorem shows that, in the...

12 KB (1,866 words) - 16:09, 19 July 2025

script, and noting that the Indus script appears to have a similar conditional entropy to Old Tamil. These scholars have proposed readings of many signs;...

65 KB (6,446 words) - 01:29, 5 June 2025

Likelihood function (redirect from Conditional likelihood)

interpreted within the context of information theory. Bayes factor, Conditional entropy, Conditional probability, Empirical likelihood, Likelihood principle, Likelihood-ratio...

64 KB (8,546 words) - 13:13, 3 March 2025

Information diagram (redirect from Entropy diagram)

relationships among Shannon's basic measures of information: entropy, joint entropy, conditional entropy and mutual information. Information diagrams are a useful...

3 KB (494 words) - 06:20, 4 March 2024

quantum relative entropy is a measure of distinguishability between two quantum states. It is the quantum mechanical analog of relative entropy. For simplicity...

13 KB (2,421 words) - 01:44, 14 April 2025

Information theory, Entropy, Differential entropy, Conditional entropy, Joint entropy, Mutual information, Directed information, Conditional mutual information...

21 KB (3,087 words) - 20:03, 2 May 2025