mathematical theory of probability, the entropy rate or source information rate is a function assigning an entropy to a stochastic process. For a strongly...

5 KB (804 words) - 00:13, 3 June 2025

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

72 KB (10,220 words) - 13:03, 6 June 2025

where $H_b$ denotes the binary entropy function. Plot of the rate-distortion function for $p = 0.5$: Suppose...

15 KB (2,327 words) - 09:59, 31 March 2025

In information theory, the cross-entropy between two probability distributions $p$ and $q$, over the same underlying...

19 KB (3,264 words) - 23:00, 21 April 2025

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$...

11 KB (2,142 words) - 15:57, 16 May 2025

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse...

111 KB (14,228 words) - 21:07, 24 May 2025

information theory. Information rate is the average entropy per symbol. For memoryless sources, this is merely the entropy of each symbol, while, in the...

64 KB (7,973 words) - 23:39, 4 June 2025

information theory, joint entropy is a measure of the uncertainty associated with a set of variables. The joint Shannon entropy (in bits) of two discrete...

6 KB (1,159 words) - 16:23, 16 May 2025

Y chromosome (section High mutation rate)

exactly 2 for no redundancy), the Y chromosome's entropy rate is only 0.84. From the definition of entropy rate, the Y chromosome has a much lower information...

81 KB (8,045 words) - 12:04, 1 June 2025

for lossless data compression is the source information rate, also known as the entropy rate. The bitrates in this section are approximately the minimum...

31 KB (3,458 words) - 21:07, 12 June 2025

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of...

36 KB (4,479 words) - 17:16, 8 April 2025

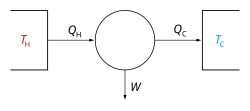

Entropy production (or generation) is the amount of entropy produced during a heat process, used to evaluate the efficiency of the process. Entropy is...

27 KB (4,689 words) - 22:22, 27 April 2025

Maximum entropy spectral estimation is a method of spectral density estimation. The goal is to improve the spectral quality based on the principle of...

4 KB (600 words) - 09:55, 25 March 2025

$h(X)$ with probability 1. Here $h(X)$ is the entropy rate of the source. Similar theorems apply to other versions of LZ algorithm...

18 KB (2,566 words) - 09:26, 9 January 2025

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend...

23 KB (2,842 words) - 23:31, 21 April 2025

it is synonymous with net bit rate or useful bit rate exclusive of error-correction codes. Entropy rate Information rate Punctured code Huffman, W. Cary...

2 KB (244 words) - 05:42, 12 April 2024

distribution of outgoing edges, locally maximizing entropy rate, MERW maximizes it globally (average entropy production) by sampling a uniform probability...

18 KB (2,814 words) - 01:37, 31 May 2025

$H(X)$ or simply $H$ denotes the entropy rate of $X$, which must exist for all discrete-time stationary...

23 KB (3,965 words) - 09:57, 31 March 2025

Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The...

22 KB (3,526 words) - 01:18, 25 April 2025

Mutual information (redirect from Mutual entropy)

variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies...

56 KB (8,853 words) - 23:22, 5 June 2025

Kolmogorov complexity (redirect from Algorithmic entropy)

where $H_b$ is the binary entropy function (not to be confused with the entropy rate). The Kolmogorov complexity function is equivalent...

59 KB (7,768 words) - 21:41, 12 June 2025

contributions to the entropy are always present, because crystals always grow at a finite rate and at temperature. However, the residual entropy is often quite...

5 KB (788 words) - 11:29, 10 February 2025

signal. Entropy Entropy production Entropy rate History of entropy Entropy of mixing Entropy (information theory) Entropy (computing) Entropy (energy dispersal)...

24 KB (3,060 words) - 17:12, 10 March 2024

of raw data, the rate of a source of information is the average entropy per symbol. For memoryless sources, this is merely the entropy of each symbol,...

8 KB (1,123 words) - 00:53, 6 December 2024

Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness...

5 KB (707 words) - 12:45, 16 February 2025

Shannon's source coding theorem (section Fixed rate lossless source coding for discrete time non-stationary independent sources)

compress such data such that the code rate (average number of bits per symbol) is less than the Shannon entropy of the source, without it being virtually...

12 KB (1,881 words) - 21:05, 11 May 2025

Measure-preserving dynamical system (redirect from Kolmogorov entropy)

crucial role in the construction of the measure-theoretic entropy of a dynamical system. The entropy of a partition $\mathcal{Q}$ is defined...

23 KB (3,592 words) - 05:13, 10 May 2025

wavelet entropies are obtained using the definition of entropy given by Shannon. Given the complexity of the mechanisms regulating heart rate, it is reasonable...

70 KB (8,492 words) - 08:15, 25 May 2025

High-entropy alloys (HEAs) are alloys that are formed by mixing equal or relatively large proportions of (usually) five or more elements. Prior to the...

105 KB (12,696 words) - 04:09, 2 June 2025

Random walk (section Maximal entropy random walk)

The multiagent random walk. Random walk chosen to maximize entropy rate has much stronger localization properties. Random walks where the direction...

56 KB (7,703 words) - 20:27, 29 May 2025