Physics-informed neural networks (PINNs), also referred to as Theory-Trained Neural Networks (TTNs), are a type of universal function approximators that...

39 KB (4,952 words) - 14:47, 29 July 2025

such as in physics-informed neural networks. Unlike traditional machine learning algorithms, such as feed-forward neural networks, convolutional...

21 KB (2,336 words) - 12:11, 19 July 2025

Deep learning (redirect from Deep neural networks)

networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance...

183 KB (18,116 words) - 23:26, 2 August 2025

integration for path integrals in order to avoid the sign problem. Physics informed neural networks have been used to solve partial differential equations in both...

20 KB (2,275 words) - 04:01, 23 July 2025

In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function is an activation function defined as the...

23 KB (3,056 words) - 00:05, 21 July 2025
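
The ReLU snippet is cut off before the definition itself. The standard definition is the positive part of the argument, f(x) = max(0, x). A minimal Python sketch follows; the function names are illustrative, not from any library. The derivative is undefined at exactly 0, so the value used there is a convention.

```python
def relu(x: float) -> float:
    """Rectified linear unit: returns x for positive inputs and 0 otherwise."""
    return max(0.0, x)


def relu_derivative(x: float) -> float:
    """Derivative of ReLU; the value at x == 0 is a convention (0 here)."""
    return 1.0 if x > 0 else 0.0


if __name__ == "__main__":
    print([relu(v) for v in (-2.0, 0.0, 3.5)])  # [0.0, 0.0, 3.5]
```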

with physics-informed machine learning. In particular, physics-informed neural networks (PINNs) use complete physics laws to fit neural networks to solutions...

16 KB (2,106 words) - 10:14, 13 July 2025
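
Using a physical law to fit a network can be sketched as a loss function that penalises violations of that law. This is a toy illustration under stated assumptions: the "law" is the ODE du/dt + u = 0 with u(0) = 1 rather than a PDE, the network `mlp` and its parameter layout are hypothetical, and du/dt is approximated by central finite differences where a PINN would normally use automatic differentiation. Training, meaning minimising this loss over the parameters, is omitted.

```python
import math
import random


def mlp(t, params):
    """Hypothetical one-hidden-layer tanh network u(t; params)."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w * t + b) for w, b in zip(w1, b1)]
    return sum(v * h for v, h in zip(w2, hidden)) + b2


def physics_informed_loss(u, collocation, h=1e-4):
    """Toy PINN-style loss for du/dt + u = 0 with u(0) = 1.

    physics term: mean squared ODE residual at the collocation points
    boundary term: squared error in the initial condition
    du/dt uses central differences (an assumption; PINNs normally
    differentiate the network with automatic differentiation).
    """
    residuals = []
    for t in collocation:
        du_dt = (u(t + h) - u(t - h)) / (2 * h)
        residuals.append(du_dt + u(t))
    physics = sum(r * r for r in residuals) / len(residuals)
    boundary = (u(0.0) - 1.0) ** 2
    return physics + boundary


if __name__ == "__main__":
    points = [i / 10 for i in range(11)]
    # The exact solution exp(-t) satisfies the law, so its loss is ~0.
    print(physics_informed_loss(lambda t: math.exp(-t), points))
    # A randomly initialised network generally does not; training would
    # adjust `params` to drive this value down.
    random.seed(0)
    params = ([random.uniform(-1, 1) for _ in range(4)],
              [random.uniform(-1, 1) for _ in range(4)],
              [random.uniform(-1, 1) for _ in range(4)],
              0.0)
    print(physics_informed_loss(lambda t: mlp(t, params), points))
```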

model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called artificial neurons...

168 KB (17,613 words) - 12:10, 26 July 2025

A convolutional neural network (CNN) is a type of feedforward neural network that learns features via filter (or kernel) optimization. This type of deep...

138 KB (15,555 words) - 03:37, 31 July 2025
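
Filter (kernel) optimization means that a CNN layer learns the numbers inside small kernels that slide across the input. The sketch below shows only the sliding step, using a hand-picked 2×2 vertical-edge kernel as a stand-in for a learned one; `conv2d_valid` is a hypothetical helper with no padding and stride 1.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    Like most deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped), which is what a CNN layer applies.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


if __name__ == "__main__":
    image = [
        [0, 0, 1, 1],
        [0, 0, 1, 1],
        [0, 0, 1, 1],
    ]
    vertical_edge = [[-1, 1], [-1, 1]]  # hand-picked, not learned
    print(conv2d_valid(image, vertical_edge))
    # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The strong response in the middle column marks where the dark-to-bright edge sits; training would instead adjust the kernel values to whatever features reduce the task loss.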

On Neural Differential Equations (PhD). Oxford, United Kingdom: University of Oxford, Mathematical Institute. Physics-informed neural networks Steve...

7 KB (850 words) - 15:36, 10 June 2025

Partial differential equation (category Mathematical physics)

the adjacent volume, these methods conserve mass by design. Physics informed neural networks have been used to solve partial differential equations in both...

49 KB (6,800 words) - 08:09, 10 June 2025

Transformer (deep learning architecture) (redirect from Transformer (neural network))

multiplicative units. Neural networks using multiplicative units were later called sigma-pi networks or higher-order networks. LSTM became the standard...

106 KB (13,107 words) - 01:38, 26 July 2025
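
A multiplicative (sigma-pi) unit sums weighted products of its inputs instead of weighted inputs alone. A minimal sketch follows; the (weight, indices) term format is an illustrative choice, not a standard API. The example shows that one such unit can compute XOR on binary inputs.

```python
from math import prod


def sigma_pi_unit(x, terms):
    """Sum of weighted products of inputs.

    `terms` is a list of (weight, indices) pairs: each term multiplies the
    inputs at `indices` (the "pi" part), and the weighted terms are summed
    (the "sigma" part). An ordinary linear unit uses only single indices.
    """
    return sum(w * prod(x[i] for i in idx) for w, idx in terms)


if __name__ == "__main__":
    # On binary inputs, x1 + x2 - 2*x1*x2 equals XOR, which no single
    # linear-threshold unit can compute.
    xor_terms = [(1.0, (0,)), (1.0, (1,)), (-2.0, (0, 1))]
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, sigma_pi_unit([a, b], xor_terms))
```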

Feedforward refers to recognition-inference architecture of neural networks. Artificial neural network architectures are based on inputs multiplied by weights...

21 KB (2,242 words) - 18:37, 19 July 2025
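
"Inputs multiplied by weights" describes each layer's computation: a weighted sum plus a bias, passed through an activation, with signals flowing in one direction only. A minimal sketch with plain lists; layer sizes, weights, and helper names are illustrative assumptions.

```python
def relu(v):
    return max(0.0, v)


def identity(v):
    return v


def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: output k is activation(weights[k] . inputs + biases[k])."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def feedforward(inputs, layers):
    """Apply layers in order; signals flow forward only, with no feedback loops."""
    for weights, biases, activation in layers:
        inputs = dense_layer(inputs, weights, biases, activation)
    return inputs


if __name__ == "__main__":
    layers = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),  # 2 inputs -> 2 hidden
        ([[1.0, 1.0]], [0.0], identity),                # 2 hidden -> 1 output
    ]
    print(feedforward([2.0, 1.0], layers))  # [2.5]
```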

PyTorch (section PyTorch neural networks)

with strong acceleration via graphics processing units (GPU); deep neural networks built on a tape-based automatic differentiation system. In 2001, Torch...

18 KB (1,540 words) - 20:40, 23 July 2025
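
"Tape-based automatic differentiation" can be illustrated with a toy: each operation is recorded on a tape during the forward pass, and the tape is replayed backwards to accumulate gradients (reverse mode). This is a sketch written for illustration, not PyTorch's implementation or API; the class `Tape` and its methods are hypothetical.

```python
class Tape:
    """Toy reverse-mode autodiff: record ops forward, replay them backward."""

    def __init__(self):
        self.values = []  # value of every recorded variable, by index
        self.ops = []     # (output_index, [(input_index, local_gradient), ...])

    def var(self, value):
        """Register a value and return its index on the tape."""
        self.values.append(value)
        return len(self.values) - 1

    def add(self, a, b):
        out = self.var(self.values[a] + self.values[b])
        self.ops.append((out, [(a, 1.0), (b, 1.0)]))  # d(a+b)/da = d(a+b)/db = 1
        return out

    def mul(self, a, b):
        out = self.var(self.values[a] * self.values[b])
        self.ops.append((out, [(a, self.values[b]), (b, self.values[a])]))  # d(ab)/da = b
        return out

    def gradient(self, output):
        """Walk the tape in reverse, applying the chain rule."""
        grads = [0.0] * len(self.values)
        grads[output] = 1.0
        for out, parents in reversed(self.ops):
            for index, local in parents:
                grads[index] += grads[out] * local
        return grads


if __name__ == "__main__":
    tape = Tape()
    x = tape.var(3.0)
    y = tape.var(4.0)
    z = tape.add(tape.mul(x, y), x)  # z = x*y + x
    grads = tape.gradient(z)
    print(grads[x], grads[y])  # 5.0 3.0  (dz/dx = y + 1, dz/dy = x)
```

Because every output is recorded after its inputs, replaying the tape in reverse order always visits a node before the nodes it depends on.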

proposed in 1986 at the annual invitation-only Snowbird Meeting on Neural Networks for Computing organized by the California Institute of Technology and...

13 KB (1,236 words) - 09:03, 19 February 2025

A neural radiance field (NeRF) is a neural field for reconstructing a three-dimensional representation of a scene from two-dimensional images. The NeRF...

21 KB (2,616 words) - 15:20, 10 July 2025

Language model (redirect from Neural net language model)

data sparsity problem. Neural networks avoid this problem by representing words as non-linear combinations of weights in a neural net. A large language...

17 KB (2,424 words) - 12:05, 30 July 2025

Memory-Augmented Neural Networks" (PDF). Google DeepMind. Retrieved 29 October 2019. Munkhdalai, Tsendsuren; Yu, Hong (2017). "Meta Networks". Proceedings...

23 KB (2,496 words) - 16:53, 17 April 2025

scaling can also be applied to deep neural network classifiers. For image classification, such as CIFAR-100, small networks like LeNet-5 have good calibration...

7 KB (831 words) - 12:21, 9 July 2025
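
The calibration snippet is truncated, so the exact method it names is not visible. One widely used scaling method for deep classifiers is temperature scaling, which divides the logits by a single scalar T > 0 before the softmax; treat this as an illustrative assumption rather than the method the article describes. T > 1 lowers overconfident probabilities without changing the predicted class, and in practice T is usually fit on held-out data. The logits below are made up.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [4.0, 1.0, 0.0]  # made-up classifier outputs
    for T in (1.0, 2.0):
        probs = softmax(logits, T)
        print(T, [round(p, 3) for p in probs])
```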

roots in the early study of neural networks such as Jeffrey Elman's 1993 paper Learning and development in neural networks: the importance of starting...

13 KB (1,389 words) - 19:53, 17 July 2025

Artificial neural networks (ANNs) are models created using machine learning to perform a number of tasks. Their creation was inspired by biological neural circuitry...

85 KB (8,625 words) - 20:54, 10 June 2025

In artificial neural networks, recurrent neural networks (RNNs) are designed for processing sequential data, such as text, speech, and time series, where...

90 KB (10,415 words) - 12:04, 31 July 2025
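
Processing sequential data means the network carries a hidden state from one time step to the next. A minimal Elman-style sketch with plain lists; the weight names and sizes are illustrative assumptions. The usage shows that reordering the same inputs changes the final state.

```python
import math


def rnn_step(x, h, W_xh, W_hh, b):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    return [math.tanh(sum(w * xi for w, xi in zip(W_xh[k], x))
                      + sum(u * hj for u, hj in zip(W_hh[k], h))
                      + b[k])
            for k in range(len(b))]


def run_rnn(sequence, hidden_size, W_xh, W_hh, b):
    """Feed a sequence through the same step, starting from a zero state."""
    h = [0.0] * hidden_size
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b)
    return h


if __name__ == "__main__":
    W_xh, W_hh, b = [[1.0]], [[0.5]], [0.0]
    print(run_rnn([[1.0], [0.0]], 1, W_xh, W_hh, b))
    print(run_rnn([[0.0], [1.0]], 1, W_xh, W_hh, b))  # same inputs, other order
```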

Multilayer perceptron (category Neural network architectures)

linearly separable. Modern neural networks are trained using backpropagation and are colloquially referred to as "vanilla" networks. MLPs grew out of an effort...

16 KB (1,932 words) - 03:01, 30 June 2025
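
Linear separability can be made concrete with XOR: no single threshold unit separates its classes, but one hidden layer suffices. The weights below are hand-picked for illustration rather than learned by backpropagation, and the function names are hypothetical.

```python
def step(v):
    """Heaviside step activation used by classic perceptrons."""
    return 1 if v > 0 else 0


def xor_mlp(x1, x2):
    """Two-layer perceptron with hand-picked weights that computes XOR.

    The hidden units compute OR and AND; the output fires for
    "OR but not AND", which is exactly XOR on binary inputs.
    """
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_mlp(a, b))
```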

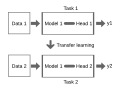

classify EMG. The experiments noted that the accuracy of neural networks and convolutional neural networks was improved through transfer learning both prior...

15 KB (1,651 words) - 02:51, 27 June 2025

Approach for Policy Learning from Trajectory Preference Queries". Advances in Neural Information Processing Systems. 25. Curran Associates, Inc. Retrieved 26...

62 KB (8,617 words) - 14:51, 3 August 2025

researchers started in 2000 to use neural networks to learn language models. Following the breakthrough of deep neural networks in image classification around...

135 KB (14,248 words) - 17:13, 3 August 2025

Machine learning (section Artificial neural networks)

machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine...

140 KB (15,517 words) - 12:17, 3 August 2025

developed by Ian Goodfellow and his colleagues in June 2014. In a GAN, two neural networks compete with each other in the form of a zero-sum game, where one agent's...

95 KB (13,885 words) - 21:17, 2 August 2025
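
In the original GAN formulation, the zero-sum game is usually written as a minimax problem over a value function $V$. Here the discriminator $D$ outputs the probability that its input is real, the generator $G$ maps noise $z$ to samples, $p_{\text{data}}$ is the data distribution, and $p_z$ is the noise prior:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

$D$ is trained to increase $V$ and $G$ to decrease it, so one network's gain is exactly the other's loss.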

Gated recurrent unit (redirect from GRU neural net)

Gated recurrent units (GRUs) are a gating mechanism in recurrent neural networks, introduced in 2014 by Kyunghyun Cho et al. The GRU is like a long short-term...

9 KB (1,290 words) - 21:05, 2 August 2025
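
The gating mechanism can be sketched for a single unit with scalar input and state. Conventions differ between sources: this sketch assumes h_new = (1 - z) * n + z * h with the reset gate applied to the previous state before the recurrent weight, and the parameter names in the dict are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(x, h, p):
    """One GRU step for a scalar input x and scalar state h.

    z is the update gate, r the reset gate and n the candidate state.
    Other sources swap the roles of z and 1 - z, or apply r after the
    recurrent product.
    """
    z = sigmoid(p["w_z"] * x + p["u_z"] * h + p["b_z"])
    r = sigmoid(p["w_r"] * x + p["u_r"] * h + p["b_r"])
    n = math.tanh(p["w_n"] * x + p["u_n"] * (r * h) + p["b_n"])
    return (1.0 - z) * n + z * h


if __name__ == "__main__":
    params = dict.fromkeys(["w_z", "u_z", "b_z", "w_r", "u_r", "b_r",
                            "w_n", "u_n", "b_n"], 0.0)
    params["b_z"] = 50.0  # update gate saturates at 1, so the old state is kept
    print(gru_step(1.0, 0.8, params))  # 0.8
```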

Graph neural networks (GNN) are specialized artificial neural networks that are designed for tasks whose inputs are graphs. One prominent example is molecular...

43 KB (4,802 words) - 14:49, 3 August 2025
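
A common way for a GNN to consume a graph is message passing: each node updates its features from an aggregate of its neighbours' features. The sketch assumes scalar node features, mean aggregation, and a ReLU, all illustrative choices. `message_passing_layer` is a hypothetical helper, and real GNN layers use learned weight matrices rather than the two scalars here.

```python
def message_passing_layer(features, edges, self_weight, neighbor_weight):
    """One round of mean-aggregation message passing on an undirected graph.

    new[v] = relu(self_weight * features[v] + neighbor_weight * mean(neighbours of v))
    """
    neighbors = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)
    updated = {}
    for node, value in features.items():
        nbrs = neighbors[node]
        mean = sum(features[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
        updated[node] = max(0.0, self_weight * value + neighbor_weight * mean)
    return updated


if __name__ == "__main__":
    feats = {"A": 1.0, "B": 0.0, "C": 3.0}  # path graph A - B - C
    print(message_passing_layer(feats, [("A", "B"), ("B", "C")], 1.0, 1.0))
    # {'A': 1.0, 'B': 2.0, 'C': 3.0}
```

Stacking several such layers lets information travel further across the graph, one hop per layer.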

Hence, some early neural networks bear the name Boltzmann Machine. Paul Smolensky calls $-E$ the Harmony. A network seeks low energy...

31 KB (2,770 words) - 17:17, 16 July 2025
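
The energy picture can be sketched with a Hopfield-style network, an assumption made here for concreteness: units take values ±1, thresholds are zero, and weights are symmetric with no self-connections, giving $E = -\tfrac{1}{2}\sum_{i \neq j} w_{ij} s_i s_j$. A Boltzmann machine uses an energy of the same form but updates units stochastically. Harmony is simply $-E$, and under these assumptions each deterministic update below either keeps $E$ the same or lowers it. The function names are illustrative.

```python
def energy(weights, state):
    """Energy with zero thresholds: E = -1/2 * sum over i != j of w_ij s_i s_j."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n) if i != j)


def harmony(weights, state):
    """Smolensky's Harmony is the negated energy: high harmony means low energy."""
    return -energy(weights, state)


def update_unit(weights, state, i):
    """Deterministic asynchronous update of unit i; returns a new state list."""
    field = sum(weights[i][j] * state[j] for j in range(len(state)) if j != i)
    new_state = list(state)
    new_state[i] = 1 if field >= 0 else -1
    return new_state


if __name__ == "__main__":
    w = [[0.0, 1.0], [1.0, 0.0]]  # two units that "prefer" to agree
    s = [1, -1]
    print(energy(w, s))  # 1.0
    s = update_unit(w, s, 1)
    print(s, energy(w, s), harmony(w, s))  # [1, 1] -1.0 1.0
```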