Sample entropy (SampEn; more appropriately $K_2$ entropy or Takens-Grassberger-Procaccia correlation entropy) is a modification of approximate entropy...

9 KB (1,407 words) - 19:28, 24 May 2025
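A minimal Python sketch of SampEn follows. It assumes the usual definition: count pairs of length-m templates within Chebyshev distance r, excluding self-matches, then do the same for length m + 1, and return -ln(A/B). The function name `sample_entropy` and its defaults are hypothetical. The tolerance `r` is taken in the data's own units, although in practice it is often set to a fraction of the series' standard deviation. An undefined result, where no matches are found, is reported as infinity.

```python
import math


def sample_entropy(x, m=2, r=0.2):
    """Sample entropy -ln(A/B) of the sequence x (r in the data's units)."""
    n = len(x)

    def count_pairs(length):
        # Use the same n - m starting points for both template lengths,
        # so the counts for m and m + 1 are comparable.
        templates = [x[i:i + length] for i in range(n - m)]
        pairs = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):  # j > i: no self-matches
                dist = max(abs(a - b) for a, b in zip(templates[i], templates[j]))
                if dist <= r:
                    pairs += 1
        return pairs

    b = count_pairs(m)      # similar template pairs of length m
    a = count_pairs(m + 1)  # similar template pairs of length m + 1
    if a == 0 or b == 0:
        return math.inf     # SampEn is undefined when no matches are found
    return -math.log(a / b)
```

A perfectly periodic input such as `[1, 2] * 10` gives zero, because every length-m match also matches at length m + 1.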

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

71 KB (10,189 words) - 07:29, 15 July 2025
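As a small illustration of that definition, the sketch below computes H(X) = -Σ p(x) log p(x) for a discrete distribution. It also adds a plug-in estimate from observed samples that uses relative frequencies as probabilities. Both helper names are hypothetical, and the default base is 2, so the result is in bits.

```python
import math
from collections import Counter


def entropy(probs, base=2.0):
    """Shannon entropy H(X) = -sum p log p; zero-probability terms contribute 0."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def plugin_entropy(samples, base=2.0):
    """Estimate H(X) from observed samples using their relative frequencies."""
    counts = Counter(samples)
    total = len(samples)
    return entropy((c / total for c in counts.values()), base)
```

A fair coin has one bit of entropy, and a uniform choice among four outcomes has two bits.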

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend...

23 KB (2,842 words) - 23:31, 21 April 2025

In statistics, an approximate entropy (ApEn) is a technique used to quantify the amount of regularity and the unpredictability of fluctuations over time-series...

16 KB (2,695 words) - 18:40, 7 July 2025
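A sketch of ApEn, assuming Pincus's formulation, is shown below. For each length-m template, C_i is the fraction of templates within Chebyshev distance r, with the template itself included. Φ^m is the average of ln C_i, and ApEn = Φ^m - Φ^(m+1). The self-match is the main difference from sample entropy. The function name and defaults are hypothetical.

```python
import math


def approximate_entropy(x, m=2, r=0.2):
    """ApEn(m, r, N) = Phi^m(r) - Phi^(m+1)(r), counting self-matches."""
    n = len(x)

    def phi(length):
        templates = [x[i:i + length] for i in range(n - length + 1)]
        count = len(templates)
        total = 0.0
        for t in templates:
            # C_i: fraction of templates within Chebyshev distance r of t
            # (t itself is included, so C_i is never zero).
            matches = sum(
                1 for u in templates
                if max(abs(a - b) for a, b in zip(t, u)) <= r
            )
            total += math.log(matches / count)
        return total / count

    return phi(m) - phi(m + 1)
```

Counting self-matches keeps every logarithm finite. It also biases ApEn toward regularity for short series, which is the shortcoming sample entropy was designed to reduce.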

the true cross-entropy, where the test set is treated as samples from $p(x)$.[citation needed] The cross entropy arises in classification...

19 KB (3,272 words) - 17:36, 22 July 2025
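The estimate described in that snippet treats the test set as draws from p(x) and averages the negative log-probability that a model q assigns to them. The sketch below assumes q is a hypothetical dictionary from outcomes to probabilities and reports the result in bits.

```python
import math


def empirical_cross_entropy(test_samples, q):
    """Monte Carlo estimate of H(p, q) = -E_p[log2 q(x)] from samples of p.

    q maps each outcome to the model probability; every test outcome must
    have q(x) > 0, otherwise the estimate is infinite.
    """
    total = 0.0
    for x in test_samples:
        qx = q.get(x, 0.0)
        if qx == 0.0:
            return math.inf
        total -= math.log2(qx)
    return total / len(test_samples)
```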

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

distributions $P$ and $Q$ defined on the same sample space, $\mathcal{X}$, the relative entropy from $Q$ to $P$ is defined to be $D_{\text{KL}}(P \parallel$...

77 KB (13,075 words) - 21:27, 5 July 2025
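For discrete distributions, the relative entropy is D_KL(P ∥ Q) = Σ P(x) ln(P(x)/Q(x)). The sketch below assumes both distributions are given as dictionaries over the same sample space and returns nats. The function name is hypothetical.

```python
import math


def kl_divergence(p, q):
    """Relative entropy D_KL(P || Q) in nats for discrete distributions.

    p and q map outcomes to probabilities on the same sample space.
    """
    total = 0.0
    for x, px in p.items():
        if px == 0.0:
            continue  # 0 * log(0 / q) is taken to be 0
        qx = q.get(x, 0.0)
        if qx == 0.0:
            return math.inf  # P puts mass where Q has none
        total += px * math.log(px / qx)
    return total
```

Swapping the arguments shows the asymmetry. For example, D_KL({a: 1}, {a: ½, b: ½}) = ln 2, while the reverse direction is infinite.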

The conditional entropy measures the average uncertainty Bob has about Alice's state upon sampling from his own system. The min-entropy can be interpreted...

15 KB (2,716 words) - 23:06, 21 April 2025

pointwise correlation dimension, approximate entropy, sample entropy, multiscale entropy analysis, sample asymmetry and memory length (based on inverse...

70 KB (8,497 words) - 17:58, 23 July 2025

"Empirical Likelihood Ratios Applied to Goodness-of-Fit Tests Based on Sample Entropy". Computational Statistics and Data Analysis. 54 (2): 531–545. doi:10...

9 KB (1,150 words) - 17:39, 20 September 2024

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse...

111 KB (14,220 words) - 03:00, 30 June 2025

entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy,...

31 KB (4,332 words) - 03:15, 1 July 2025

the sample size and the size of the alphabet of the probability distribution. The histogram approach uses the idea that the differential entropy of a...

10 KB (1,415 words) - 07:41, 28 April 2025
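One common histogram estimator is sketched below, under stated assumptions: equal-width bins spanning the sample range and a fixed bin count. Each bin's probability p_i = n_i/N is converted to a density p_i/w, and the differential entropy is approximated as h ≈ -Σ p_i ln(p_i/w). The function name and default bin count are hypothetical.

```python
import math


def histogram_entropy(samples, bins=50):
    """Plug-in histogram estimate of differential entropy (in nats).

    Uses equal-width bins spanning the sample range; with bin probability
    p_i = n_i / N and width w, h is approximated by -sum p_i ln(p_i / w).
    Requires at least two distinct sample values.
    """
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / bins
    counts = [0] * bins
    for s in samples:
        k = min(int((s - lo) / width), bins - 1)  # the maximum goes in the last bin
        counts[k] += 1
    n = len(samples)
    return -sum((c / n) * math.log(c / (n * width)) for c in counts if c > 0)
```

For evenly spread points on [0, 1), the estimate is close to 0, the differential entropy of the uniform distribution. Standard normal samples should approach ½ ln(2πe) ≈ 1.42 nats as N grows. The bin count trades bias against variance, and this sketch makes no attempt to choose it automatically.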

Hardware random number generator (redirect from Entropy pool)

generates random numbers from a physical process capable of producing entropy, unlike a pseudorandom number generator (PRNG) that utilizes a deterministic...

28 KB (3,305 words) - 08:22, 16 June 2025

Cauchy distribution (section Entropy)

S2CID 231728407. Vasicek, Oldrich (1976). "A Test for Normality Based on Sample Entropy". Journal of the Royal Statistical Society, Series B. 38 (1): 54–59...

46 KB (6,910 words) - 18:35, 11 July 2025

The cross-entropy (CE) method is a Monte Carlo method for importance sampling and optimization. It is applicable to both combinatorial and continuous...

7 KB (1,085 words) - 19:50, 23 April 2025
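A minimal one-dimensional sketch of the method applied to optimization follows. It assumes a Gaussian sampling distribution, for which the cross-entropy update reduces to refitting the mean and standard deviation to the elite (best-scoring) samples. All names, defaults, and the fixed iteration count are hypothetical. Practical variants often add smoothing or a floor on sigma.

```python
import random
import statistics


def cross_entropy_minimize(f, mu=0.0, sigma=10.0, n_samples=100,
                           n_elite=10, iterations=20, seed=0):
    """Cross-entropy method for minimizing a 1-D function f.

    Each round samples from N(mu, sigma), keeps the n_elite best points,
    and refits mu and sigma to those elite samples.
    """
    rng = random.Random(seed)
    for _ in range(iterations):
        xs = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        elite = sorted(xs, key=f)[:n_elite]  # lowest f values are "elite"
        mu = statistics.mean(elite)
        sigma = statistics.pstdev(elite)
    return mu
```

For example, `cross_entropy_minimize(lambda x: (x - 3.0) ** 2)` should settle near 3.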

Kolmogorov complexity (redirect from Algorithmic entropy)

distance, Manifold hypothesis, Solomonoff's theory of inductive inference, Sample entropy. However, an $s$ with $K(s) = n$ need not exist for every $n$. For example...

60 KB (7,896 words) - 07:35, 21 July 2025

The concept entropy was first developed by German physicist Rudolf Clausius in the mid-nineteenth century as a thermodynamic property that predicts that...

18 KB (2,621 words) - 19:57, 18 March 2025

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$...

11 KB (2,142 words) - 05:02, 6 July 2025
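For a discrete joint distribution, the conditional entropy can be computed as H(Y | X) = -Σ p(x, y) log₂ p(y | x), where p(y | x) = p(x, y)/p(x). The sketch assumes the joint distribution is a hypothetical dictionary keyed by (x, y) pairs.

```python
import math
from collections import defaultdict


def conditional_entropy(joint):
    """H(Y | X) in bits from a joint distribution {(x, y): p(x, y)}.

    Uses H(Y | X) = -sum p(x, y) log2 p(y | x), with p(y | x) = p(x, y) / p(x).
    """
    p_x = defaultdict(float)
    for (x, _), p in joint.items():
        p_x[x] += p  # marginalize out y
    return -sum(p * math.log2(p / p_x[x])
                for (x, _), p in joint.items() if p > 0)
```

If Y is a copy of X, then H(Y | X) is 0. If X and Y are independent fair bits, then H(Y | X) is 1 bit.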

Analysis 62, 1–23. Vasicek, Oldrich (1976). "A Test for Normality Based on Sample Entropy". Journal of the Royal Statistical Society. Series B (Methodological)...

12 KB (1,627 words) - 12:37, 9 June 2025

2000). "Physiological time-series analysis using approximate entropy and sample entropy". American Journal of Physiology. Heart and Circulatory Physiology...

14 KB (1,415 words) - 02:00, 18 July 2025

Correlation entropy, Approximate entropy, Sample entropy, Fourier entropy, Wavelet entropy, Dispersion entropy, Fluctuation dispersion entropy, Rényi entropy, Higher-order...

49 KB (5,826 words) - 13:24, 3 August 2025

Perplexity (category Entropy and information)

probability. The perplexity is the exponentiation of the entropy, a more commonly encountered quantity. Entropy measures the expected or "average" number of bits...

13 KB (1,895 words) - 17:29, 22 July 2025
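When entropy is measured in bits, perplexity is 2 raised to that entropy. The sketch below (the function name is hypothetical) shows this directly. A uniform distribution over k outcomes has perplexity k, so perplexity can be read as an "effective number of choices".

```python
import math


def perplexity(probs):
    """Perplexity 2**H(p) of a discrete distribution, with H in bits."""
    h = -sum(p * math.log2(p) for p in probs if p > 0)
    return 2 ** h
```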

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for sampling from a specified multivariate probability...

37 KB (6,064 words) - 02:46, 9 August 2025
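As a concrete, hedged example of the algorithm, the sketch below samples a standard bivariate normal with correlation rho. It alternately draws each coordinate from its full conditional: x | y ~ N(rho·y, 1 - rho²), and symmetrically for y. The target distribution, burn-in length, and function name are illustrative assumptions.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=1000, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Alternately draws x | y ~ N(rho * y, 1 - rho**2) and
    y | x ~ N(rho * x, 1 - rho**2); returns the (x, y) pairs after burn-in.
    """
    rng = random.Random(seed)
    cond_sd = math.sqrt(1.0 - rho ** 2)  # std dev of each full conditional
    x = y = 0.0
    samples = []
    for step in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_sd)
        y = rng.gauss(rho * x, cond_sd)
        if step >= burn_in:
            samples.append((x, y))
    return samples
```

Successive draws are correlated, so the effective sample size is smaller than `n_samples`. The chain mixes more slowly as |rho| approaches 1.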

five oxide precursors will result in a multi-phase sample, suggesting that configurational entropy stabilizes the material. It can clearly be seen from...

41 KB (4,233 words) - 11:38, 2 August 2025

Diversity index (redirect from Entropy (ecology))

proportional abundance of each class under a weighted geometric mean. The Rényi entropy, which adds the ability to freely vary the kind of weighted mean used....

25 KB (3,455 words) - 20:40, 17 July 2025
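The link between the Rényi entropy and diversity can be sketched as follows. The diversity of order q (the Hill number) is the exponential of the Rényi entropy of order q. Order 0 counts the classes present, order 1 is the Shannon (geometric-mean) case, and order 2 is the inverse Simpson index. The function names are hypothetical.

```python
import math


def renyi_entropy(probs, q):
    """Rényi entropy of order q (nats); q = 1 is the Shannon limit."""
    ps = [p for p in probs if p > 0]
    if q == 1:
        return -sum(p * math.log(p) for p in ps)
    return math.log(sum(p ** q for p in ps)) / (1 - q)


def hill_number(probs, q):
    """True diversity (effective number of types) of order q: exp(H_q)."""
    return math.exp(renyi_entropy(probs, q))
```

For equal abundances, every order gives the number of classes. For uneven abundances, higher orders give more weight to the dominant classes.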

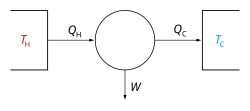

Second law of thermodynamics (redirect from Law of Entropy)

process." The second law of thermodynamics establishes the concept of entropy as a physical property of a thermodynamic system. It predicts whether processes...

108 KB (15,568 words) - 04:28, 26 July 2025

Differential pulse-code modulation (section Option 1: difference between two consecutive quantized samples)

entropy coded because the entropy of the difference signal is much smaller than that of the original discrete signal treated as independent samples....

3 KB (357 words) - 23:53, 5 December 2024
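The claim in that snippet can be illustrated with a sketch of Option 1 (differences between consecutive quantized samples). It assumes the samples are already quantized integers and that there is no quantizer in the prediction loop, so the coding is lossless. The entropy is a plug-in estimate that treats samples as independent, as the snippet does. All helper names are hypothetical.

```python
import math
from collections import Counter


def empirical_entropy(values):
    """Plug-in entropy in bits of a sequence, treating samples as independent."""
    counts = Counter(values)
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def dpcm_encode(samples):
    """Keep the first quantized sample, then differences of consecutive samples."""
    return [samples[0]] + [b - a for a, b in zip(samples, samples[1:])]


def dpcm_decode(residuals):
    """Invert dpcm_encode with a running sum."""
    out = [residuals[0]]
    for d in residuals[1:]:
        out.append(out[-1] + d)
    return out
```

For a slowly varying signal such as `[i // 3 for i in range(300)]`, the raw samples take 100 distinct values. The differences are only 0 or 1, so an entropy coder needs far fewer bits per difference than per sample.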

High-entropy alloys (HEAs) are alloys that are formed by mixing equal or relatively large proportions of (usually) five or more elements. Prior to the...

106 KB (12,696 words) - 10:50, 8 July 2025

conditional entropy of $T$ given the value of attribute $a$. This is intuitively plausible when interpreting entropy Η as a...

21 KB (3,032 words) - 10:59, 9 June 2025
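The snippet's definition, information gain as the entropy of T minus its conditional entropy given attribute a, can be sketched for a single categorical attribute as follows. The function names and the list-based data layout are assumptions for illustration.

```python
import math
from collections import Counter, defaultdict


def entropy_of(labels):
    """Shannon entropy in bits of the label distribution in a list."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def information_gain(labels, attribute_values):
    """IG(T, a) = H(T) - H(T | a) for one categorical attribute a."""
    groups = defaultdict(list)
    for v, y in zip(attribute_values, labels):
        groups[v].append(y)  # partition T by the value of a
    n = len(labels)
    h_cond = sum(len(g) / n * entropy_of(g) for g in groups.values())
    return entropy_of(labels) - h_cond
```

An attribute that separates the labels perfectly recovers all of H(T). An attribute whose groups each mirror the overall label mix gains nothing.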

current density or flux of entropy. The given sample is cooled down to (almost) absolute zero (for example by submerging the sample in liquid helium). At absolute...

43 KB (3,620 words) - 22:17, 23 June 2025