In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

71 KB (10,208 words) - 07:29, 15 July 2025
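
As a rough illustration of the "average level of uncertainty" this snippet describes, the sketch below estimates Shannon entropy in bits per symbol from the observed symbol frequencies of a sequence. The function name `shannon_entropy` and the choice of base-2 logarithms are assumptions made for the example, not taken from the listed article.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Empirical Shannon entropy of a sequence, in bits per symbol.

    Hypothetical helper for illustration: probabilities are estimated
    from the relative frequency of each distinct symbol.
    """
    counts = Counter(symbols)
    total = len(symbols)
    # H = -sum(p * log2(p)) over the observed symbols
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


if __name__ == "__main__":
    print(shannon_entropy("aabb"))  # two equally likely symbols
    print(shannon_entropy("aaaa"))  # a single certain symbol
```

A sequence with two equally frequent symbols comes out at 1 bit per symbol, while a sequence made of one repeated symbol carries no uncertainty.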

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared...

4 KB (478 words) - 14:12, 18 June 2025

In mathematics and theoretical computer science, entropy compression is an information theoretic method for proving that a random process terminates,...

10 KB (1,387 words) - 07:22, 27 December 2024

coding achieves compression rates close to the best possible for a particular statistical model, which is given by the information entropy, whereas Huffman...

34 KB (4,155 words) - 04:20, 2 March 2025

LZ77 and LZ78 (redirect from Data compression/LZ77 78)

information entropy is developed for individual sequences (as opposed to probabilistic ensembles). This measure gives a bound on the data compression ratio...

18 KB (2,566 words) - 09:26, 9 January 2025

Huffman coding (redirect from Data compression/Huffman coding)

decreases) compression. As the size of the block approaches infinity, Huffman coding theoretically approaches the entropy limit, i.e., optimal compression. However...

36 KB (4,569 words) - 16:10, 24 June 2025

Arithmetic coding (redirect from Data compression/Arithmetic coding)

Arithmetic coding (AC) is a form of entropy encoding used in lossless data compression. Normally, a string of characters is represented using a fixed number...

41 KB (5,380 words) - 17:26, 12 June 2025

Image compression is a type of data compression applied to digital images, to reduce their cost for storage or transmission. Algorithms may take advantage...

19 KB (2,109 words) - 04:21, 30 May 2025

the same as considering absolute entropy (corresponding to data compression) as a special case of relative entropy (corresponding to data differencing)...

68 KB (7,556 words) - 07:48, 8 July 2025

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse...

111 KB (14,228 words) - 03:00, 30 June 2025

dictionary-matching stage (LZ77), and unlike other common compression algorithms does not combine it with an entropy coding stage (e.g. Huffman coding in DEFLATE)...

7 KB (641 words) - 03:34, 24 March 2025

Information theory (category Data compression)

Conditional entropy Covert channel Data compression Decoder Differential entropy Fungible information Information fluctuation complexity Information entropy Joint...

69 KB (8,508 words) - 04:47, 12 July 2025

Shannon's source coding theorem (category Data compression)

data compression for data whose source is an independent identically-distributed random variable, and the operational meaning of the Shannon entropy. Named...

12 KB (1,881 words) - 21:05, 11 May 2025

Golomb coding (category Entropy coding)

Rice coding is used as the entropy encoding stage in a number of lossless image compression and audio data compression methods. Golomb coding uses a...

18 KB (2,610 words) - 16:51, 7 June 2025

Incremental encoding (redirect from Front compression)

codes. It may be combined with other general lossless data compression techniques such as entropy encoding and dictionary coders to compress the remaining...

2 KB (238 words) - 00:48, 6 December 2024

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$...

77 KB (13,075 words) - 21:27, 5 July 2025
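
For readers who want a concrete feel for relative entropy, here is a minimal sketch that computes the KL divergence between two discrete distributions given as aligned lists of probabilities. The function name `kl_divergence`, the use of bits (base-2 logarithms), and the list-based input format are assumptions for the example; the conventions that zero-probability terms of P contribute nothing, and that the divergence is infinite when Q assigns zero probability to an outcome P allows, are the standard ones.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) in bits for discrete distributions p and q.

    Hypothetical helper for illustration: p and q are equal-length
    sequences of probabilities over the same outcomes.
    """
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # a zero-probability outcome of P contributes nothing
        if qi == 0:
            return math.inf  # P allows an outcome that Q rules out
        total += pi * math.log2(pi / qi)
    return total


if __name__ == "__main__":
    print(kl_divergence([0.5, 0.5], [0.5, 0.5]))  # identical distributions
    print(kl_divergence([1.0, 0.0], [0.5, 0.5]))  # certain outcome vs. fair coin
```

Note that the measure is not symmetric: swapping the arguments in the second call gives an infinite divergence rather than the same value.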

In data compression, a universal code for integers is a prefix code that maps the positive integers onto binary codewords, with the additional property...

7 KB (988 words) - 21:49, 11 June 2025

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and...

26 KB (3,269 words) - 15:54, 15 June 2025

Adiabatic process (redirect from Adiabatic compression)

of the position of the gas is reduced, and seemingly would reduce the entropy of the system, but the temperature of the system will rise as the process...

44 KB (6,355 words) - 21:46, 24 June 2025

values may be confused Entropy encoding, data compression strategies to produce a code length equal to the entropy of a message Entropy (computing), an indicator...

5 KB (707 words) - 12:45, 16 February 2025

Isentropic process (redirect from Isentropic compression)

compression that entails work done on or by the flow. For an isentropic flow, entropy density can vary between different streamlines. If the entropy density...

15 KB (2,097 words) - 00:23, 18 July 2025

Asymmetric numeral systems (redirect from Finite State Entropy)

(ANS) is a family of entropy encoding methods introduced by Jarosław (Jarek) Duda from Jagiellonian University, used in data compression since 2014 due to...

29 KB (3,723 words) - 13:35, 13 July 2025

non-randomness or data compression; thus this interpretation also applies to this index. In addition, interpretation of biodiversity as entropy has also been proposed...

6 KB (1,006 words) - 03:29, 30 November 2024

Context-adaptive binary arithmetic coding (category Entropy coding)

is a form of entropy encoding used in the H.264/MPEG-4 AVC and High Efficiency Video Coding (HEVC) standards. It is a lossless compression technique, although...

13 KB (1,634 words) - 00:03, 21 December 2024

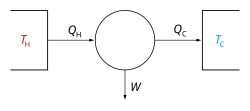

Carnot cycle (section The temperature–entropy diagram)

converted to the work done by the system. The cycle is reversible, and entropy is conserved, merely transferred between the thermal reservoirs and the...

25 KB (3,234 words) - 12:59, 16 July 2025

Context-adaptive variable-length coding (category Entropy coding)

form of entropy coding used in H.264/MPEG-4 AVC video encoding. It is an inherently lossless compression technique, like almost all entropy-coders. In...

5 KB (432 words) - 15:56, 17 November 2024

conditional entropy conditional quantum entropy confusion and diffusion cross-entropy data compression entropic uncertainty (Hirschman uncertainty) entropy encoding...

1 KB (93 words) - 09:42, 8 August 2023

compression ratio much better than 2:1 because of the intrinsic entropy of the data. Compression algorithms which provide higher ratios either incur very large...

6 KB (854 words) - 23:54, 25 April 2024

Lossless JPEG (redirect from JPEG Lossless Compression)

standards were limited in their compression performance. Total decorrelation cannot be achieved by first order entropy of the prediction residuals employed...

18 KB (2,433 words) - 23:09, 4 July 2025

Rate–distortion theory (category Data compression)

information theory which provides the theoretical foundations for lossy data compression; it addresses the problem of determining the minimal number of bits per...

15 KB (2,315 words) - 09:59, 31 March 2025