In machine learning, backpropagation is a gradient computation method commonly used when training a neural network to compute its parameter updates. It is...

55 KB (7,843 words) - 22:21, 22 July 2025
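To make the definition concrete, here is a minimal sketch. It assumes a hypothetical network with one sigmoid hidden unit, a linear output, and a squared-error loss. Backpropagation applies the chain rule from the loss back to each weight, and the resulting gradients drive a gradient-descent parameter update. The finite-difference function is included only to check the hand-derived gradients. All function names are illustrative.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1: float, w2: float, x: float):
    """Hypothetical tiny network: hidden h = sigmoid(w1 * x), output y = w2 * h."""
    h = sigmoid(w1 * x)
    y = w2 * h
    return h, y


def loss(w1: float, w2: float, x: float, target: float) -> float:
    """Squared-error loss 0.5 * (y - target)^2."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backprop(w1: float, w2: float, x: float, target: float):
    """Return (dL/dw1, dL/dw2) by applying the chain rule from output to input."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target              # derivative of 0.5 * (y - target)^2
    dL_dw2 = dL_dy * h              # y = w2 * h
    dL_dh = dL_dy * w2              # error propagated back to the hidden unit
    dL_dz = dL_dh * h * (1.0 - h)   # sigmoid'(z) = h * (1 - h)
    dL_dw1 = dL_dz * x              # z = w1 * x
    return dL_dw1, dL_dw2


def numerical_grad(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used only to sanity-check backprop()."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2


def sgd_step(w1, w2, x, target, lr=0.1):
    """One gradient-descent parameter update using the backpropagated gradients."""
    g1, g2 = backprop(w1, w2, x, target)
    return w1 - lr * g1, w2 - lr * g2


if __name__ == "__main__":
    print("backprop: ", backprop(0.5, -1.2, 2.0, 1.0))
    print("numerical:", numerical_grad(0.5, -1.2, 2.0, 1.0))
```

Real networks apply the same backward pass to whole weight matrices, usually through an automatic-differentiation library rather than hand-written derivatives.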

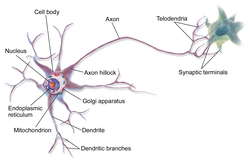

Neural backpropagation is the phenomenon in which, after the action potential of a neuron creates a voltage spike down the axon (normal propagation), another...

18 KB (2,262 words) - 01:19, 5 April 2024

multiplication remains the core, essential for backpropagation or backpropagation through time. Thus neural networks cannot contain feedback like negative...

21 KB (2,242 words) - 18:37, 19 July 2025

to Neural Networks (D. Kriesel) – Illustrated, bilingual manuscript about artificial neural networks; Topics so far: Perceptrons, Backpropagation, Radial...

168 KB (17,613 words) - 12:10, 26 July 2025

Backpropagation through time (BPTT) is a gradient-based technique for training certain types of recurrent neural networks, such as Elman networks. The...

6 KB (745 words) - 21:06, 21 March 2025
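The following sketches how BPTT works on a recurrent network. It assumes a hypothetical scalar Elman-style recurrence h_t = tanh(w * h_{t-1} + u * x_t) with a squared-error loss at every step. The network is unrolled over the input sequence. The backward pass then walks the steps in reverse, adding each step's local error to the gradient arriving from step t+1 and accumulating the gradients of the shared weights w and u.

```python
import math


def forward(w: float, u: float, xs, h0: float = 0.0):
    """Unroll the hypothetical recurrence; hs[t] is the hidden state after step t."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))
    return hs


def loss(w: float, u: float, xs, ys) -> float:
    """Sum of per-step squared errors 0.5 * (h_t - y_t)^2."""
    hs = forward(w, u, xs)
    return 0.5 * sum((h - y) ** 2 for h, y in zip(hs[1:], ys))


def bptt(w: float, u: float, xs, ys):
    """Return (dL/dw, dL/du) by backpropagating through the unrolled sequence."""
    hs = forward(w, u, xs)
    dw = du = 0.0
    dh_next = 0.0  # gradient reaching h_t from later time steps
    for t in range(len(xs), 0, -1):
        dh = (hs[t] - ys[t - 1]) + dh_next  # local error plus error from the future
        dz = dh * (1.0 - hs[t] ** 2)        # tanh'(z) = 1 - tanh(z)^2
        dw += dz * hs[t - 1]                # shared recurrent weight
        du += dz * xs[t - 1]                # shared input weight
        dh_next = dz * w                    # pass gradient back to h_{t-1}
    return dw, du


if __name__ == "__main__":
    print(bptt(0.8, 1.1, [0.5, -1.0, 0.3], [0.2, 0.1, -0.4]))
```

Because w and u are reused at every step, their gradients are sums over all time steps. This is what distinguishes BPTT from backpropagation through a feedforward network with separate weights per layer.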

Vanishing gradients and exploding gradients, seen during backpropagation in earlier neural networks, are prevented by the regularization that comes from...

138 KB (15,555 words) - 03:37, 31 July 2025

the vanishing gradient problem of automatic differentiation or backpropagation in neural networks in 1992. In 1993, such a system solved a "Very Deep Learning"...

90 KB (10,414 words) - 07:48, 4 August 2025

Deep learning (redirect from Deep neural network)

Ostrovski et al. republished it in 1971. Paul Werbos applied backpropagation to neural networks in 1982 (his 1974 PhD thesis, reprinted in a 1994 book...

183 KB (18,116 words) - 23:26, 2 August 2025

Multilayer perceptron (category Neural network architectures)

distinguish data that is not linearly separable. Modern neural networks are trained using backpropagation and are colloquially referred to as "vanilla" networks...

16 KB (1,932 words) - 03:01, 30 June 2025

hardware and the development of the backpropagation algorithm, as well as recurrent neural networks and convolutional neural networks, renewed interest in ANNs...

85 KB (8,625 words) - 20:54, 10 June 2025

A residual neural network (also referred to as a residual network or ResNet) is a deep learning architecture in which the layers learn residual functions...

28 KB (3,042 words) - 20:18, 1 August 2025
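The next sketch illustrates why learning a residual function helps backpropagation. It is a toy assumption, not the ResNet architecture itself (which uses convolutional layers and normalization): a chain of scalar tanh layers, compared with and without identity skip connections. With a skip, each layer computes a + F(a), so its local derivative is 1 + F'(a). The product of these factors cannot shrink below 1 here, whereas the plain chain's product decays with depth. Function names are hypothetical.

```python
import math


def plain_chain_grad(depth: int, x: float = 0.5, w: float = 0.5) -> float:
    """d(out)/d(in) for a_k = tanh(w * a_{k-1}) with no skip connections."""
    a, grad = x, 1.0
    for _ in range(depth):
        z = w * a
        grad *= w * (1.0 - math.tanh(z) ** 2)        # chain rule through tanh layer
        a = math.tanh(z)
    return grad


def residual_chain_grad(depth: int, x: float = 0.5, w: float = 0.5) -> float:
    """Same layers wrapped as residual blocks a_k = a_{k-1} + tanh(w * a_{k-1})."""
    a, grad = x, 1.0
    for _ in range(depth):
        z = w * a
        grad *= 1.0 + w * (1.0 - math.tanh(z) ** 2)  # identity path contributes the 1
        a = a + math.tanh(z)
    return grad


if __name__ == "__main__":
    for depth in (5, 30):
        print(depth, plain_chain_grad(depth), residual_chain_grad(depth))
```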

paper published in 1986 that popularised the backpropagation algorithm for training multi-layer neural networks, although they were not the first to...

68 KB (5,888 words) - 05:45, 6 August 2025

action potential back towards the cell body. In some cells, however, neural backpropagation does occur through the dendritic branching and may have important...

23 KB (2,711 words) - 04:55, 28 April 2025

empirical risk minimization or backpropagation in order to fit some preexisting dataset. The term deep neural network refers to neural networks that have more...

8 KB (802 words) - 20:41, 9 June 2025

Romania: IEEE. Werbos, Paul J. (1994). The Roots of Backpropagation. From Ordered Derivatives to Neural Networks and Political Forecasting. New York, NY:...

12 KB (1,793 words) - 18:13, 30 June 2025

Backpropagation through structure (BPTS) is a gradient-based technique for training recursive neural networks, proposed in a 1996 paper written by Christoph...

808 bytes (76 words) - 17:48, 26 June 2025

Transformer (deep learning architecture) (redirect from Transformer (neural network))

(2017). "The Reversible Residual Network: Backpropagation Without Storing Activations". Advances in Neural Information Processing Systems. 30. Curran...

106 KB (13,107 words) - 01:38, 26 July 2025

gradient descent-based backpropagation (BP) is not available. SNNs have much larger computational costs for simulating realistic neural models than traditional...

33 KB (3,747 words) - 18:23, 18 July 2025

Neuroplasticity (redirect from Neural plasticity)

plasticity Brain training Environmental enrichment (neural) Neural adaptation Neural backpropagation Neuronal sprouting Neuroplastic effects of pollution...

121 KB (13,394 words) - 09:33, 18 July 2025

In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function is an activation function defined as the...

23 KB (3,056 words) - 00:05, 21 July 2025
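The rectifier is the positive part of its input, f(x) = max(0, x). The sketch below also shows how the rectifier behaves during backpropagation: it passes the upstream gradient through where the input was positive and blocks it elsewhere. The derivative at exactly 0 is undefined, and choosing 0 there is an assumption of this sketch (a common convention). Function names are illustrative.

```python
def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, 0 for x < 0; 0 is chosen at x == 0."""
    return 1.0 if x > 0 else 0.0


def relu_backward(upstream, inputs):
    """Backward pass: gate each upstream gradient by the ReLU derivative."""
    return [g * relu_grad(x) for g, x in zip(upstream, inputs)]


if __name__ == "__main__":
    xs = [-1.0, 0.0, 2.0]
    print([relu(x) for x in xs])
    print(relu_backward([1.0, 2.0, 3.0], xs))
```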

process of training artificial neural networks through backpropagation of errors. He also was a pioneer of recurrent neural networks. Werbos was one of the...

4 KB (281 words) - 15:06, 27 July 2025

Catastrophic interference (category Artificial neural networks)

catastrophic interference during two different experiments with backpropagation neural network modelling. Experiment 1: Learning the ones and twos addition...

36 KB (4,656 words) - 09:48, 1 August 2025

Artificial neuron (redirect from Node (neural networks))

weights. Neural networks also started to be used as a general function approximation model. The best-known training algorithm, called backpropagation, has been...

31 KB (3,602 words) - 10:03, 29 July 2025

Graph neural networks (GNN) are specialized artificial neural networks that are designed for tasks whose inputs are graphs. One prominent example is molecular...

43 KB (4,802 words) - 14:49, 3 August 2025

Vanishing gradient problem (category Artificial neural networks)

and later layers encountered when training neural networks with backpropagation. In such methods, neural network weights are updated in proportion to...

24 KB (3,711 words) - 14:28, 9 July 2025
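The vanishing gradient problem can be demonstrated with a minimal sketch. It assumes a hypothetical chain of scalar sigmoid layers a_k = sigmoid(w * a_{k-1}). Backpropagation multiplies one local derivative per layer, and the sigmoid's derivative never exceeds 0.25. With w = 1, the gradient reaching the input therefore shrinks at least geometrically with depth.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def input_gradient(depth: int, x: float = 0.0, w: float = 1.0) -> float:
    """d(output)/d(input) for the hypothetical chain a_k = sigmoid(w * a_{k-1})."""
    a, grad = x, 1.0
    for _ in range(depth):
        s = sigmoid(w * a)
        grad *= w * s * (1.0 - s)  # one chain-rule factor per layer, each <= 0.25 * |w|
        a = s
    return grad


if __name__ == "__main__":
    for depth in (1, 5, 20):
        print(depth, input_gradient(depth))
```

A weight update proportional to such a gradient is correspondingly tiny for the earliest layers.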

consequence, the chances of field summation are slim. However, neural backpropagation, as a typically longer dendritic current dipole, can be picked up...

133 KB (15,909 words) - 15:40, 2 August 2025

function space $\mathcal{U}$. Neural operators can be trained directly using backpropagation and gradient descent-based methods. Another...

16 KB (2,106 words) - 10:14, 13 July 2025

gradient is computed using backpropagation through structure (BPTS), a variant of backpropagation through time used for recurrent neural networks. The universal...

8 KB (911 words) - 17:50, 25 June 2025

Generative adversarial network (redirect from Generative adversarial neural network)

synthesized by the generator are evaluated by the discriminator. Independent backpropagation procedures are applied to both networks so that the generator produces...

95 KB (13,885 words) - 21:17, 2 August 2025

Almeida–Pineda recurrent backpropagation is an extension to the backpropagation algorithm that is applicable to recurrent neural networks. It is a type...

2 KB (207 words) - 19:06, 26 June 2025