In probability theory, the total variation distance is a statistical distance between probability distributions, and is sometimes called the statistical...

6 KB (785 words) - 23:05, 17 March 2025

such measures. However, when μ and ν are probability measures, the total variation distance of probability measures can be defined as ‖μ − ν‖...

25 KB (3,547 words) - 03:35, 10 January 2025

on the prior probability that our guess will be correct. Given the above definition of total variation distance, a sequence μn of measures defined on the...

18 KB (3,026 words) - 18:10, 7 April 2025

mixing time, is defined as the smallest t such that the total variation distance of probability measures is small: t_mix(ε) = min{t ≥ 0 : max_{x ∈ S} [max...

5 KB (604 words) - 20:16, 9 July 2024

Lévy–Prokhorov metric (category Probability theory)

theorem Tightness of measures Weak convergence of measures Wasserstein metric Radon distance Total variation distance of probability measures Dudley 1989,...

5 KB (824 words) - 20:26, 8 January 2025

and hence these distances are not directly related to measures of distances between probability measures. Again, a measure of distance between random variables...

6 KB (643 words) - 02:01, 12 May 2025

trace distance serves as a direct quantum generalization of the total variation distance between probability distributions. Given two probability distributions...

9 KB (1,682 words) - 01:57, 25 February 2025

Wasserstein metric (redirect from Wasserstein distance)

space of all probability measures with bounded support. Hutchinson metric Lévy metric Lévy–Prokhorov metric Fréchet distance Total variation distance of probability...

32 KB (5,194 words) - 03:15, 15 May 2025

In probability and statistics, the Hellinger distance (closely related to, although different from, the Bhattacharyya distance) is used to quantify the...

10 KB (1,783 words) - 12:44, 4 March 2025

Kullback–Leibler divergence (redirect from Kullback-Leibler distance)

... is a type of statistical distance: a measure of how much a model probability distribution Q is different from a true probability distribution P....

77 KB (13,054 words) - 16:34, 16 May 2025

important statistical distances are integral probability metrics, including the Wasserstein-1 distance and the total variation distance. In addition to theoretical...

17 KB (1,880 words) - 14:34, 3 May 2024

Central tendency (redirect from Measures of central tendency)

central tendency (or measure of central tendency) is a central or typical value for a probability distribution. Colloquially, measures of central tendency...

13 KB (1,720 words) - 02:18, 19 January 2025

Isolation by distance (IBD) is a term used to refer to the accrual of local genetic variation under geographically limited dispersal. The IBD model is...

14 KB (1,717 words) - 07:08, 10 February 2025

F-divergence (redirect from Ali-Silvey distances)

probability distributions P and Q. Many common divergences, such as KL-divergence, Hellinger distance, and total...

23 KB (3,992 words) - 03:25, 12 April 2025

moment problem Stieltjes moment problem Prior probability distribution Total variation distance Hellinger distance Wasserstein metric Lévy–Prokhorov metric...

11 KB (1,000 words) - 14:07, 2 May 2024

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment...

48 KB (6,688 words) - 17:43, 6 May 2025

Jaccard index (redirect from Jaccard distance)

on the collection of all finite sets. There is also a version of the Jaccard distance for measures, including probability measures. If μ...

25 KB (3,921 words) - 10:52, 11 April 2025

Jensen–Shannon divergence (redirect from Jensen-Shannon distance)

In probability theory and statistics, the Jensen–Shannon divergence, named after Johan Jensen and Claude Shannon, is a method of measuring the similarity...

16 KB (2,308 words) - 22:01, 14 May 2025

repeated applications of the Gilbert–Shannon–Reeds model. The total variation distance measures how similar or dissimilar two probability distributions are;...

9 KB (1,290 words) - 08:12, 4 May 2024

Genetic distance is a measure of the genetic divergence between species or between populations within a species, whether the distance measures time from...

39 KB (4,746 words) - 11:17, 3 May 2025

Kullback-Leibler divergence Total variation distance of probability measures Kolmogorov distance The strongest of these distances is the Kullback-Leibler...

22 KB (3,845 words) - 17:38, 16 April 2022

Semenovich Pinsker, is an inequality that bounds the total variation distance (or statistical distance) in terms of the Kullback–Leibler divergence. The inequality...

10 KB (2,106 words) - 16:58, 4 April 2025

index Total correlation Total least squares Total sum of squares Total survey error Total variation distance – a statistical distance measure TPL Tables –...

87 KB (8,280 words) - 23:04, 12 March 2025

function of bounded variation, also known as BV function, is a real-valued function whose total variation is bounded (finite): the graph of a function...

61 KB (8,441 words) - 20:55, 29 April 2025

the probability that two individuals from the total population are identical by descent. Using this definition, FST can be interpreted as measuring how...

24 KB (1,954 words) - 09:49, 30 March 2025

An index of qualitative variation (IQV) is a measure of statistical dispersion in nominal distributions. Examples include the variation ratio or the information...

88 KB (15,020 words) - 10:17, 10 January 2025

Subset simulation (category CS1 maint: DOI inactive as of November 2024)

variation distance of probability measures. Rare event sampling Curse of dimensionality Line sampling See Au & Wang for an introductory coverage of subset...

10 KB (1,505 words) - 01:10, 12 November 2024

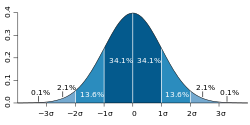

In probability theory and statistics, variance is the expected value of the squared deviation from the mean of a random variable. The standard deviation...

61 KB (10,215 words) - 11:05, 7 May 2025

In statistics, the standard deviation is a measure of the amount of variation of the values of a variable about its mean. A low standard deviation indicates...

59 KB (8,233 words) - 19:16, 23 April 2025

Entropy (information theory) (redirect from Entropy of a probability distribution)

This measures the expected amount of information needed to describe the state of the variable, considering the distribution of probabilities across...

72 KB (10,264 words) - 06:07, 14 May 2025