can be burned, but cannot be "unburned". The word 'entropy' has entered popular usage to refer to a lack of order or predictability, or of a gradual decline...

33 KB (5,257 words) - 11:59, 23 March 2025

Entropy is a scientific concept, most commonly associated with states of disorder, randomness, or uncertainty. The term and the concept are used in diverse...

111 KB (14,228 words) - 21:07, 24 May 2025

In information theory, an entropy coding (or entropy encoding) is any lossless data compression method that attempts to approach the lower bound declared...

4 KB (478 words) - 02:27, 14 May 2025
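The lower bound referred to in this snippet is the Shannon entropy of the source. The sketch below uses Huffman coding as one concrete entropy-coding method (the snippet does not single out any particular method). It computes Huffman codeword lengths and compares the average code length with that bound. The names `huffman_code_lengths` and `shannon_entropy_bits` are hypothetical helpers written for illustration.

```python
import heapq
import math
from itertools import count


def shannon_entropy_bits(probs):
    """Entropy in bits/symbol: the lower bound on average code length."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def huffman_code_lengths(probs):
    """Return the Huffman codeword length for each symbol index."""
    if len(probs) == 1:
        return [1]  # a lone symbol still needs one bit in a prefix code
    tiebreak = count()  # keeps heap comparisons away from the lists
    # Heap entry: (subtree probability, tiebreaker, symbol indices in subtree)
    heap = [(p, next(tiebreak), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        for i in s1 + s2:
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, next(tiebreak), s1 + s2))
    return lengths


probs = [0.5, 0.25, 0.125, 0.125]
lengths = huffman_code_lengths(probs)
avg = sum(p * l for p, l in zip(probs, lengths))
print(lengths, avg, shannon_entropy_bits(probs))
```

For dyadic probabilities like these the average length equals the entropy exactly; in general a Huffman code's average length lies within one bit of the entropy.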

In information theory, the entropy of a random variable quantifies the average level of uncertainty or information associated with the variable's potential...

72 KB (10,220 words) - 13:03, 6 June 2025
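A minimal sketch of the defining formula H(X) = -Σ p(x) log p(x) for a discrete random variable, assuming the distribution is given as a list of probabilities. Base 2 (bits) is an assumed default, and `entropy` and `empirical_entropy` are illustrative names, not a library API.

```python
import math
from collections import Counter


def entropy(probs, base=2):
    """Shannon entropy H(X) = -sum p(x) log p(x); terms with p = 0 contribute 0."""
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def empirical_entropy(symbols, base=2):
    """Plug-in estimate of entropy from observed symbol frequencies."""
    counts = Counter(symbols)
    n = len(symbols)
    return entropy([c / n for c in counts.values()], base)


print(entropy([0.5, 0.5]))            # fair coin: maximal uncertainty for 2 outcomes
print(entropy([0.9, 0.1]))            # biased coin: less uncertainty
print(empirical_entropy("abracadabra"))
```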

an entropic force acting in a system is an emergent phenomenon resulting from the entire system's statistical tendency to increase its entropy, rather...

23 KB (2,595 words) - 10:33, 19 March 2025

In thermodynamics, the interpretation of entropy as a measure of energy dispersal has been exercised against the background of the traditional view, introduced...

19 KB (2,417 words) - 19:06, 2 March 2025

Negentropy (redirect from Negative entropy)

a measure of distance to normality. It is also known as negative entropy or syntropy. The concept and phrase "negative entropy" was introduced by Erwin...

11 KB (1,225 words) - 18:26, 10 June 2025

entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy,...

31 KB (4,196 words) - 11:16, 14 June 2025
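To make the principle concrete, here is a sketch for a six-sided die whose mean roll is constrained to 4.5 (an arbitrary illustrative value). With only a normalization constraint and a single mean constraint, the maximum-entropy distribution has the exponential form p_i ∝ exp(λ·i); the code finds λ by bisection. `maxent_die`, `entropy_nats`, and the bisection bracket are assumptions made for this example.

```python
import math


def maxent_die(target_mean, faces=range(1, 7), tol=1e-12):
    """Maximum-entropy distribution over `faces` with a prescribed mean.

    With only normalization and a mean constraint, the maximizer has the
    exponential-family form p_i ∝ exp(lam * x_i). The mean grows
    monotonically with lam, so lam can be found by bisection.
    """
    xs = list(faces)

    def dist(lam):
        m = max(lam * x for x in xs)  # subtract the max exponent to avoid overflow
        w = [math.exp(lam * x - m) for x in xs]
        z = sum(w)
        return [wi / z for wi in w]

    def mean(lam):
        return sum(p * x for p, x in zip(dist(lam), xs))

    # Assumed bracket: adequate unless the target mean is extremely close to 1 or 6.
    lo, hi = -50.0, 50.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return dist((lo + hi) / 2)


def entropy_nats(probs):
    return -sum(p * math.log(p) for p in probs if p > 0)


p = maxent_die(4.5)
print([round(q, 4) for q in p])
# Another distribution with the same mean of 4.5, for comparison:
alt = [0.0, 0.0, 0.25, 0.25, 0.25, 0.25]
print(entropy_nats(p) > entropy_nats(alt))
```

With the mean set to 3.5 the same procedure returns the uniform distribution, since the uniform die already satisfies that constraint and has the largest possible entropy.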

Measure-preserving dynamical system (redirect from Kolmogorov entropy)

introduction, with exercises, and extensive references.) Lai-Sang Young, "Entropy in Dynamical Systems" (pdf; ps), appearing as Chapter 16 in Entropy...

23 KB (3,592 words) - 05:13, 10 May 2025

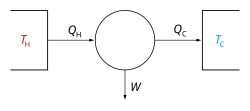

statement; Carnot's theorem (thermodynamics); Carnot heat engine; Introduction to entropy; Clausius theorem at Wolfram Research; Mortimer, R. G. Physical Chemistry...

15 KB (2,694 words) - 21:47, 28 December 2024

Gibbs entropy from classical statistical mechanics to quantum statistical mechanics, and it is the quantum counterpart of the Shannon entropy from classical...

35 KB (5,061 words) - 13:27, 1 March 2025
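This snippet appears to describe the von Neumann entropy, S(ρ) = -Tr(ρ ln ρ), which equals the Shannon entropy (in nats) of the eigenvalues of the density matrix ρ. The sketch below is deliberately restricted to a single qubit (a 2×2 density matrix), where the eigenvalues have a closed form, so it needs no linear-algebra library; a general implementation would use a numerical eigensolver. `von_neumann_entropy_qubit` is a hypothetical name.

```python
import math


def von_neumann_entropy_qubit(rho):
    """S(rho) = -Tr(rho ln rho) for a 2x2 density matrix, in nats.

    rho is [[a, b], [conj(b), d]] with a, d real and a + d = 1
    (Hermitian, unit trace, positive semidefinite). S is the Shannon
    entropy of its two eigenvalues, computed here in closed form.
    """
    a = rho[0][0].real
    d = rho[1][1].real
    b = rho[0][1]
    center = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    eigenvalues = [center + radius, center - radius]
    # Skip (numerically) zero eigenvalues: 0 * ln 0 is taken as 0.
    return -sum(lam * math.log(lam) for lam in eigenvalues if lam > 1e-15)


plus = [[0.5, 0.5], [0.5, 0.5]]   # |+><+|, a pure state
mixed = [[0.5, 0.0], [0.0, 0.5]]  # maximally mixed qubit
print(von_neumann_entropy_qubit(plus), von_neumann_entropy_qubit(mixed))
```

The state |+⟩⟨+| has diagonal entries (1/2, 1/2), whose Shannon entropy is ln 2, yet its von Neumann entropy is 0 because it is a pure state: the quantum quantity depends on the eigenvalues of ρ, not on its diagonal in one particular basis.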

define a group of physical quantities, such as temperature, energy, and entropy, that characterize thermodynamic systems in thermodynamic equilibrium....

20 KB (2,893 words) - 08:03, 9 May 2025

Kullback–Leibler divergence (redirect from Kullback–Leibler entropy)

statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted $D_{\text{KL}}(P \parallel Q)$...

77 KB (13,067 words) - 13:07, 12 June 2025
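For discrete distributions the divergence is D_KL(P ∥ Q) = Σ p(x) log(p(x)/q(x)). A minimal sketch, assuming P and Q are given as aligned probability lists over the same outcomes and using base 2 by default; `kl_divergence` is an illustrative name.

```python
import math


def kl_divergence(p, q, base=2):
    """D_KL(P || Q) = sum_x p(x) log(p(x) / q(x)) for discrete distributions.

    Terms with p(x) = 0 contribute 0; if q(x) = 0 where p(x) > 0 the
    divergence is infinite.
    """
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log(px / qx, base)
    return total


P = [0.5, 0.5]
Q = [0.25, 0.75]
print(kl_divergence(P, Q), kl_divergence(Q, P))  # the two directions differ
```

The two printed values differ, which is why the KL divergence is called a divergence rather than a distance: it is not symmetric in its arguments.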

Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The...

22 KB (3,526 words) - 01:18, 25 April 2025
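The generalization can be seen directly in code: the Rényi entropy of order α is H_α = log₂(Σ p^α)/(1 − α), and the named special cases in the snippet are its values (or limits) at α = 0 (Hartley), α → 1 (Shannon), α = 2 (collision), and α → ∞ (min-entropy). A sketch in bits; `renyi_entropy` is an illustrative name.

```python
import math


def renyi_entropy(probs, alpha):
    """Rényi entropy of order alpha, in bits.

    H_alpha = log2(sum p^alpha) / (1 - alpha) for alpha >= 0, alpha != 1.
    The special orders are handled by their limits:
      alpha = 0   -> Hartley entropy  log2(|support|)
      alpha = 1   -> Shannon entropy
      alpha = inf -> min-entropy      -log2(max p)
    Order 2 (collision entropy) comes from the general formula.
    """
    ps = [p for p in probs if p > 0]
    if alpha == 0:
        return math.log2(len(ps))
    if alpha == 1:
        return -sum(p * math.log2(p) for p in ps)
    if math.isinf(alpha):
        return -math.log2(max(ps))
    return math.log2(sum(p ** alpha for p in ps)) / (1 - alpha)


dist = [0.5, 0.25, 0.25]
for a in (0, 1, 2, math.inf):
    print(a, renyi_entropy(dist, a))
```

The printed values do not increase with α, reflecting the general fact that H_α is non-increasing in its order.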

similar to the mathematics of statistical thermodynamics worked out by Ludwig Boltzmann and J. Willard Gibbs in the 1870s, in which the concept of entropy is...

29 KB (3,734 words) - 15:19, 27 March 2025

Second law of thermodynamics (redirect from Law of Entropy)

second law may be formulated by the observation that the entropy of isolated systems left to spontaneous evolution cannot decrease, as they always tend...

107 KB (15,472 words) - 01:32, 4 May 2025

In statistical mechanics, Boltzmann's entropy formula (also known as the Boltzmann–Planck equation, not to be confused with the more general Boltzmann...

13 KB (1,572 words) - 21:53, 22 May 2025
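The snippet is cut off before the formula itself, which is S = k_B ln W, where W is the number of microstates compatible with a macrostate. A small sketch, assuming a toy system of 100 independent two-state particles so that W for "n of them up" is the binomial coefficient C(100, n); the helper names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by definition in the 2019 SI)


def boltzmann_entropy(W):
    """S = k_B ln W for W equally likely microstates."""
    return K_B * math.log(W)


def two_state_microstates(n_particles, n_up):
    """Microstates of n_particles two-state particles with exactly n_up 'up'."""
    return math.comb(n_particles, n_up)


# Illustration: 100 independent two-state particles.
for n_up in (0, 10, 50):
    W = two_state_microstates(100, n_up)
    print(n_up, W, boltzmann_entropy(W))
```

The evenly split macrostate (50 up) has by far the most microstates and therefore the largest entropy, while the fully ordered macrostate (0 up) has a single microstate and zero entropy. Because ln turns products into sums, the entropies of independent subsystems add.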

High-entropy alloys (HEAs) are alloys that are formed by mixing equal or relatively large proportions of (usually) five or more elements. Prior to the...

105 KB (12,696 words) - 04:09, 2 June 2025

Information theory (category Computer-related introductions in 1948)

and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random...

64 KB (7,973 words) - 23:39, 4 June 2025

Information (redirect from Introduction to Information theory)

representation, and entropy. Information is often processed iteratively: Data available at one step are processed into information to be interpreted and...

41 KB (4,788 words) - 16:03, 3 June 2025

Black hole thermodynamics (redirect from Black hole entropy)

holes carried no entropy, it would be possible to violate the second law by throwing mass into the black hole. The increase of the entropy of the black hole...

25 KB (3,223 words) - 06:04, 1 June 2025

Holographic principle (redirect from Holographic entropy bound)

phones to modems to hard disk drives and DVDs, rely on Shannon entropy. In thermodynamics (the branch of physics dealing with heat), entropy is popularly...

32 KB (3,969 words) - 23:05, 17 May 2025

Research concerning the relationship between the thermodynamic quantity entropy and both the origin and evolution of life began around the turn of the...

63 KB (8,442 words) - 00:31, 23 May 2025

Entropic gravity, also known as emergent gravity, is a theory in modern physics that describes gravity as an entropic force—a force with macro-scale homogeneity...

28 KB (3,736 words) - 06:45, 9 May 2025

Thermodynamics (section Introduction)

physics that deals with heat, work, and temperature, and their relation to energy, entropy, and the physical properties of matter and radiation. The behavior...

48 KB (5,843 words) - 18:33, 10 June 2025

In classical thermodynamics, entropy (from Greek τροπή (tropḗ) 'transformation') is a property of a thermodynamic system that expresses the direction...

17 KB (2,587 words) - 15:45, 28 December 2024

In thermodynamics, the entropy of mixing is the increase in the total entropy when several initially separate systems of different composition, each in...

30 KB (4,666 words) - 04:09, 10 June 2025
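For the simplest case the increase has a closed form. Assuming ideal mixing (ideal gases, or an ideal solution, at constant temperature and pressure), ΔS_mix = −R Σ n_i ln x_i, where n_i is the amount of component i and x_i its mole fraction. A sketch under that assumption; `ideal_mixing_entropy` is a hypothetical name, and real mixtures generally deviate from this value.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol K), 2019 SI value, truncated


def ideal_mixing_entropy(moles):
    """Entropy of mixing for ideal components, in J/K.

    Assumes ideal mixing at constant temperature and pressure:
    dS_mix = -R * sum_i n_i ln x_i, with x_i = n_i / n_total.
    """
    n_total = sum(moles)
    return -R * sum(n * math.log(n / n_total) for n in moles if n > 0)


print(ideal_mixing_entropy([0.5, 0.5]))  # equimolar binary, 1 mol total: R ln 2 ≈ 5.76 J/K
print(ideal_mixing_entropy([1.0, 0.0]))  # a single component: no mixing entropy
```

With five equimolar components, as in the high-entropy alloys described above, the same ideal formula gives R ln 5 per mole, which is the configurational entropy commonly quoted for such alloys.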

Differential entropy (also referred to as continuous entropy) is a concept in information theory that began as an attempt by Claude Shannon to extend the...

23 KB (2,842 words) - 23:31, 21 April 2025
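Differential entropy replaces the sum with an integral, h(X) = −∫ f(x) ln f(x) dx. The sketch below checks a midpoint-rule approximation against the known closed form for a Gaussian, h = ½ ln(2πeσ²). The choice σ = 0.1, the ±10σ integration range, and the step count are illustrative assumptions, as are the helper names.

```python
import math


def gaussian_pdf(x, sigma):
    """Density of a zero-mean normal distribution with standard deviation sigma."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def differential_entropy_numeric(pdf, lo, hi, steps=100_000):
    """h(X) = -integral f(x) ln f(x) dx, approximated by the midpoint rule on [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        fx = pdf(lo + (i + 0.5) * dx)
        if fx > 0:  # f ln f -> 0 as f -> 0
            total -= fx * math.log(fx) * dx
    return total


sigma = 0.1
numeric = differential_entropy_numeric(lambda x: gaussian_pdf(x, sigma), -10 * sigma, 10 * sigma)
closed_form = 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)
print(numeric, closed_form)
```

Both values are negative for this small σ, which illustrates one way the continuous extension differs from discrete Shannon entropy: differential entropy can be negative.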

Entropy is one of the few quantities in the physical sciences that require a particular direction for time, sometimes called an arrow of time. As one...

34 KB (5,136 words) - 15:56, 28 February 2025

$H$ is the enthalpy of the system, $S$ is the entropy of the system, $T$ is the temperature of the system, $V$...

33 KB (4,550 words) - 17:40, 5 June 2025