particularly information theory, the conditional mutual information is, in its most basic form, the expected value of the mutual information of two random...

11 KB (2,385 words) - 15:00, 16 May 2025

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two...

56 KB (8,848 words) - 15:24, 16 May 2025

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable Y...

11 KB (2,142 words) - 15:57, 16 May 2025

interpretation in algebraic topology. The conditional mutual information can be used to inductively define the interaction information for any finite number of variables...

17 KB (2,426 words) - 18:37, 28 January 2025

The score uses the conditional mutual information and the mutual information to estimate the redundancy between the already...

58 KB (6,925 words) - 07:55, 26 April 2025

generalization of quantities of information to continuous distributions), and the conditional mutual information. Also, pragmatic information has been proposed as...

64 KB (7,976 words) - 13:50, 10 May 2025

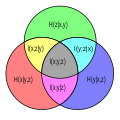

of the information content of random variables and a measure over sets. Namely the joint entropy, conditional entropy, and mutual information can be considered...

12 KB (1,762 words) - 22:37, 8 November 2024

expressed as special cases of a single inequality involving the conditional mutual information, namely I(A;B|C) ≥ 0...

13 KB (1,850 words) - 21:10, 14 April 2025

Transfer entropy (category Entropy and information)

entropy measures such as Rényi entropy. Transfer entropy is conditional mutual information, with the history of the influenced variable Y_{t−1:t−L}...

10 KB (1,293 words) - 17:15, 7 July 2024

Squashed entanglement (category Quantum information science)

S(A:B|Λ), the quantum Conditional Mutual Information (CMI), below. A more general version of Eq. (1) replaces the...

14 KB (2,131 words) - 07:38, 22 July 2024

Redundancy (information theory). The characterization here imposes an additive property with respect to a partition of a set. Meanwhile, the conditional probability...

72 KB (10,264 words) - 06:07, 14 May 2025

measures of information: entropy, joint entropy, conditional entropy and mutual information. Information diagrams are a useful pedagogical tool for teaching...

3 KB (494 words) - 06:20, 4 March 2024

Entropy rate (redirect from Source information rate)

or source information rate is a function assigning an entropy to a stochastic process. For a strongly stationary process, the conditional entropy for...

5 KB (784 words) - 18:08, 6 November 2024

Cross-entropy (category Entropy and information)

method, Logistic regression, Conditional entropy, Kullback–Leibler distance, Maximum-likelihood estimation, Mutual information, Perplexity. Thomas M. Cover,...

19 KB (3,264 words) - 23:00, 21 April 2025

I(X^i; Y_i | Y^{i−1}) is the conditional mutual information I(X_1, X_2, ..., X_i; Y_i | Y_1, Y_2, ...

18 KB (3,106 words) - 05:37, 7 April 2025

Z are conditionally independent, given Y, which means the conditional mutual information, I(X; Z | Y) = 0...

3 KB (439 words) - 16:21, 22 August 2024

Channel capacity (redirect from Information capacity)

of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization...

26 KB (4,845 words) - 09:57, 31 March 2025

Joint entropy (category Entropy and information)

Joint entropy is also used in the definition of mutual information: I(X; Y) = H(X) + H(Y) − H(X, Y)...

6 KB (1,159 words) - 16:23, 16 May 2025

Rate–distortion theory (category Information theory)

X, and I_Q(Y; X) is the mutual information between Y and X defined as I(...

15 KB (2,327 words) - 09:59, 31 March 2025

Shannon–Hartley theorem (category Information theory)

In information theory, the Shannon–Hartley theorem gives the maximum rate at which information can be transmitted over a communications channel of a specified...

21 KB (3,087 words) - 20:03, 2 May 2025

The differential analogues of entropy, joint entropy, conditional entropy, and mutual information are defined as follows: h(X) = −∫_X f(x) log ...

12 KB (2,183 words) - 15:35, 22 December 2024

Shannon's source coding theorem (category Information theory)

In information theory, Shannon's source coding theorem (or noiseless coding theorem) establishes the statistical limits to possible data compression for...

12 KB (1,881 words) - 21:05, 11 May 2025

Limiting density of discrete points (category Information theory)

In information theory, the limiting density of discrete points is an adjustment to the formula of Claude Shannon for differential entropy. It was formulated...

6 KB (966 words) - 18:36, 24 February 2025

package for computing all information distances and volumes, multivariate mutual information, conditional mutual information, joint entropies, total correlations...

9 KB (1,375 words) - 03:56, 31 July 2024

Asymptotic equipartition property (category Information theory)

I_P := −ln μ(P(x)). Similarly, the conditional information of partition P, conditional on partition Q, about x...

23 KB (3,965 words) - 09:57, 31 March 2025

classical intuition, except that quantum conditional entropies can be negative, and quantum mutual informations can exceed the classical bound of the marginal...

29 KB (4,718 words) - 20:21, 16 April 2025

In probability theory, conditional probability is a measure of the probability of an event occurring, given that another event (by assumption, presumption...

33 KB (4,706 words) - 21:24, 6 March 2025

trees, the term is sometimes used synonymously with mutual information, which is the conditional expected value of the Kullback–Leibler divergence of...

21 KB (3,026 words) - 12:35, 17 December 2024

In information theory and communication, the Slepian–Wolf coding, also known as the Slepian–Wolf bound, is a result in distributed source coding discovered...

5 KB (623 words) - 21:24, 18 September 2022

Differential entropy (category Entropy and information)

significance as a measure of discrete information since it is actually the limit of the discrete mutual information of partitions of X...

23 KB (2,842 words) - 23:31, 21 April 2025