Rectifier (neural networks) (redirect from ReLU)

In the context of artificial neural networks, the rectifier or ReLU (rectified linear unit) activation function is an activation function defined as the...

17 KB (2,279 words) - 15:23, 30 April 2024

recognition model developed by Hinton et al., the ReLU used in the 2012 AlexNet computer vision model and in the 2015 ResNet model. Aside from their empirical performance...

20 KB (1,644 words) - 07:29, 10 May 2024

$L^p$ functions is exactly $\max\{d_x + 1, d_y\}$ (for a ReLU network). More generally this also holds if both ReLU and a threshold activation function are used....

37 KB (5,026 words) - 07:52, 8 May 2024

Convolutional neural network (section ReLU layer)

usually the Frobenius inner product, and its activation function is commonly ReLU. As the convolution kernel slides along the input matrix for the layer, the...

132 KB (14,846 words) - 23:17, 16 May 2024

convolutional layer (with ReLU activation); RN = local response normalization; MP = maxpooling; FC = fully connected layer (with ReLU activation); Linear = fully...

9 KB (982 words) - 22:20, 23 March 2024

$\cdot^{T}$ indicates transposition, and $\text{LeakyReLU}$ is a modified ReLU activation function. Attention coefficients are...

34 KB (3,874 words) - 03:59, 4 May 2024

an input and output shape nn.ReLU(), # ReLU is one of many activation functions provided by nn nn.Linear(512, 512), nn.ReLU(), nn.Linear(512, 10), ) def...

12 KB (1,161 words) - 10:13, 10 May 2024

representations with the ReLU function: $\min(x,y) = x - \operatorname{ReLU}(x-y) = y - \operatorname{ReLU}(y-x)$...

31 KB (6,060 words) - 21:56, 5 April 2024
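
The identity quoted in the snippet above can be checked numerically. The following is a minimal sketch, not taken from the linked article; the function names `relu`, `min_via_relu`, and `min_via_relu_swapped` are hypothetical and chosen only for illustration.

```python
def relu(v: float) -> float:
    """Rectifier: returns v when v is positive, otherwise 0."""
    return max(v, 0.0)


def min_via_relu(x: float, y: float) -> float:
    """min(x, y) written as x - ReLU(x - y)."""
    return x - relu(x - y)


def min_via_relu_swapped(x: float, y: float) -> float:
    """The symmetric form: min(x, y) written as y - ReLU(y - x)."""
    return y - relu(y - x)


# Compare both forms against the built-in min on a few sample pairs.
for x, y in [(3, 5), (5, 3), (-2, -2), (0.5, -1.5)]:
    assert min_via_relu(x, y) == min(x, y)
    assert min_via_relu_swapped(x, y) == min(x, y)
```

When x is at most y, ReLU(x - y) is zero and the first form returns x; otherwise it subtracts the excess x - y and returns y.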

replaces tanh with the ReLU activation, and applies batch normalization (BN): $z_t = \sigma(\operatorname{BN}(W_z x_t) + U_z h_{t-1})$, $\tilde{h}_t = \operatorname{ReLU}(\operatorname{BN}(W_h x_t)$...

8 KB (1,280 words) - 07:32, 10 May 2024

the ReLU function pointwise. Thus, it can be viewed as a smoothing function which nonlinearly interpolates between a linear function and the ReLU function...

4 KB (454 words) - 06:04, 22 July 2023

oscillating activation functions with multiple zeros that outperform sigmoidal and ReLU-like activation functions on many tasks have also been recently explored...

31 KB (3,585 words) - 04:54, 19 April 2024

each node (coordinate), but today is more varied, with rectifier (ramp, ReLU) being common. $a_j^l$: activation of the $j$...

54 KB (7,493 words) - 10:45, 15 May 2024

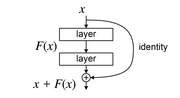

Residual neural network (redirect from ResNets)

applications through the use of GPUs to perform parallel computations and ReLU ("Rectified Linear Unit") layers to speed up gradient descent. In 2014, VGGNet...

25 KB (2,828 words) - 03:31, 4 May 2024

.lu is the Internet country code top-level domain (ccTLD) for Luxembourg. .lu domains are administered by RESTENA. Since 1 February 2010, the administrative...

3 KB (211 words) - 19:15, 24 February 2024

known as the positive part. In machine learning, it is commonly known as a ReLU activation function or a rectifier in analogy to half-wave rectification...

7 KB (974 words) - 01:04, 2 March 2024

France ISO 3166-2:RE ".RE: the internet of Réunion". Afnic. Retrieved 23 February 2023. "How would I go about getting a domain like lu.re?". Server Fault...

4 KB (357 words) - 15:02, 23 February 2023

network. Specifically, each gating is a linear-ReLU-linear-softmax network, and each expert is a linear-ReLU network. The key design desideratum for MoE...

32 KB (4,489 words) - 11:20, 26 April 2024

(16-bit integers) and W2, W3 and W4 (8-bit). It has 4 fully-connected layers, ReLU activation functions, and outputs a single number, being the score of the...

4 KB (336 words) - 02:32, 4 May 2024

repeated application of convolutions, each followed by a rectified linear unit (ReLU) and a max pooling operation. During the contraction, the spatial information...

9 KB (1,045 words) - 12:41, 10 May 2024

Lu Xun (Chinese: 鲁迅; Wade–Giles: Lu Hsün; 25 September 1881 – 19 October 1936), born Zhou Zhangshou, was a Chinese writer, literary critic, lecturer,...

56 KB (7,279 words) - 18:53, 14 May 2024

meant to mimic the sound of the drum, and an accompanying lyric, "tu-re-lu-re-lu," the flute. This is similar conceptually to the carol "The Little Drummer...

5 KB (663 words) - 14:38, 3 January 2024

is now heavily used in computer vision. In 1969 Fukushima introduced the ReLU (Rectified Linear Unit) activation function in the context of visual feature...

9 KB (776 words) - 23:28, 1 May 2024

models). In recent developments of deep learning the rectified linear unit (ReLU) is more frequently used as one of the possible ways to overcome the numerical...

21 KB (2,320 words) - 06:33, 28 April 2024

2014, The LuLu Sessions makes its US broadcast premiere as part of the America ReFramed Documentary Series on PBS and World Channel. The LuLu Sessions...

7 KB (677 words) - 07:43, 31 December 2023

白梦妍; born 23 September 1994), known professionally by her stage name Bai Lu (Chinese: 白鹿), is a Chinese actress, model and singer. She is best known for...

41 KB (2,977 words) - 04:59, 21 May 2024

neural networks with ReLU activation functions) VeriNet (Symbolic Interval Propagation-based verification of neural networks with ReLU activation functions)...

8 KB (671 words) - 23:14, 18 August 2023

pre-training, and then moved towards supervision again with the advent of dropout, ReLU, and adaptive learning rates. During the learning phase, an unsupervised...

27 KB (2,371 words) - 02:14, 13 May 2024

Lu was a Mexican pop duo formed by Mario Sandoval and Paty Cantú, both from Guadalajara, Mexico. The sound of their music is similar to Aleks Syntek's...

6 KB (284 words) - 23:57, 30 November 2023

$\operatorname{ReLU}(\mathbf{W}_2^T \operatorname{ReLU}(\mathbf{W}_1^T \mathbf{x}) + \mathbf{b}_1) + \mathbf{b}_2)$...

38 KB (6,082 words) - 07:44, 18 February 2024

neuron activation function used in neural networks, usually referred to as a ReLU; Relative light unit, a unit for measuring cleanliness by measuring the levels...

462 bytes (99 words) - 16:44, 6 April 2020